Visual Studio Performance Hub

01 Background

Visual Studio is a fully featured integrated development environment (IDE) for Android, iOS, Windows, web, and cloud development. Visual Studio provides the most powerful and feature-complete development environment, bar none. It has 4 million users and generates 1.5 billion in revenue per year.

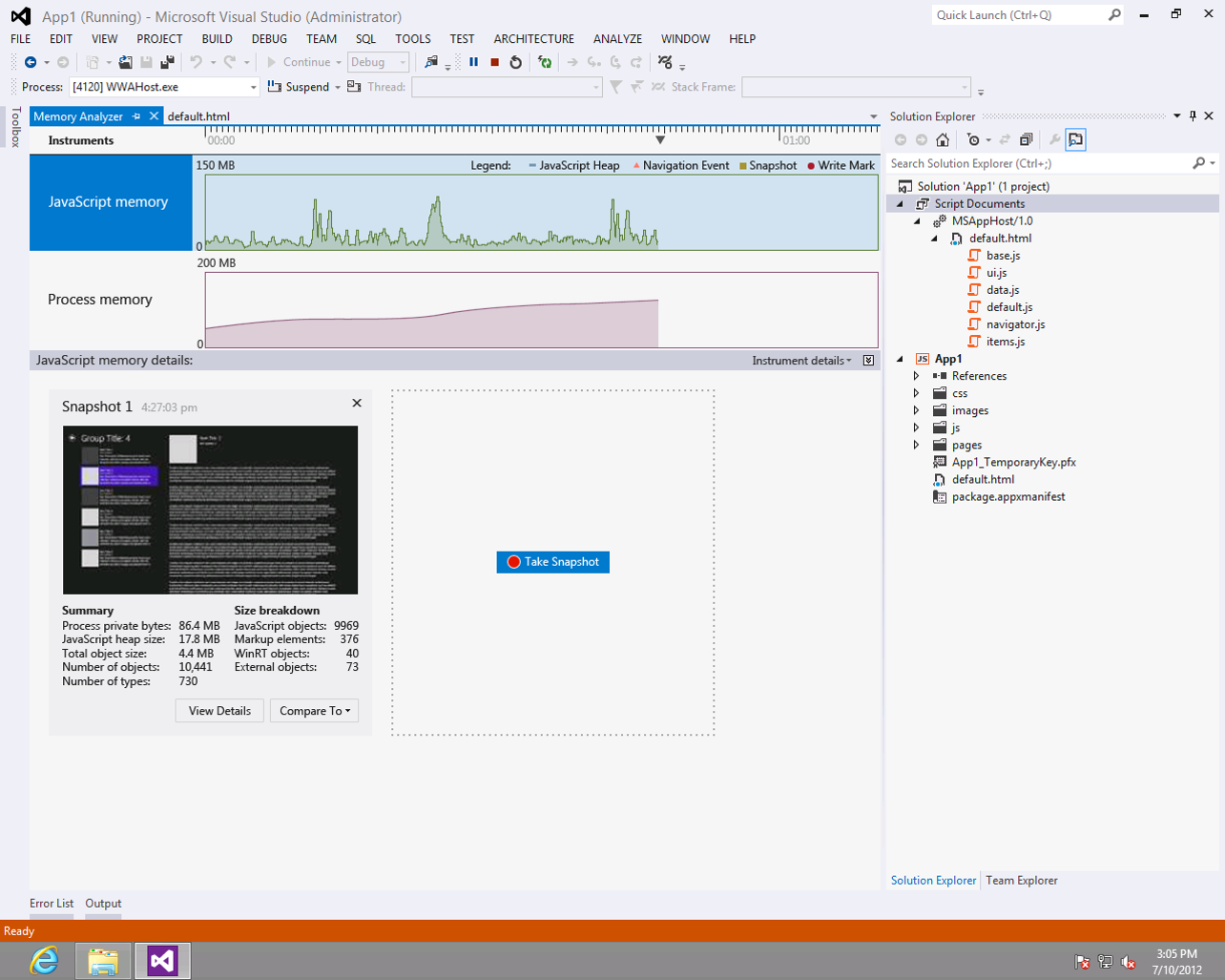

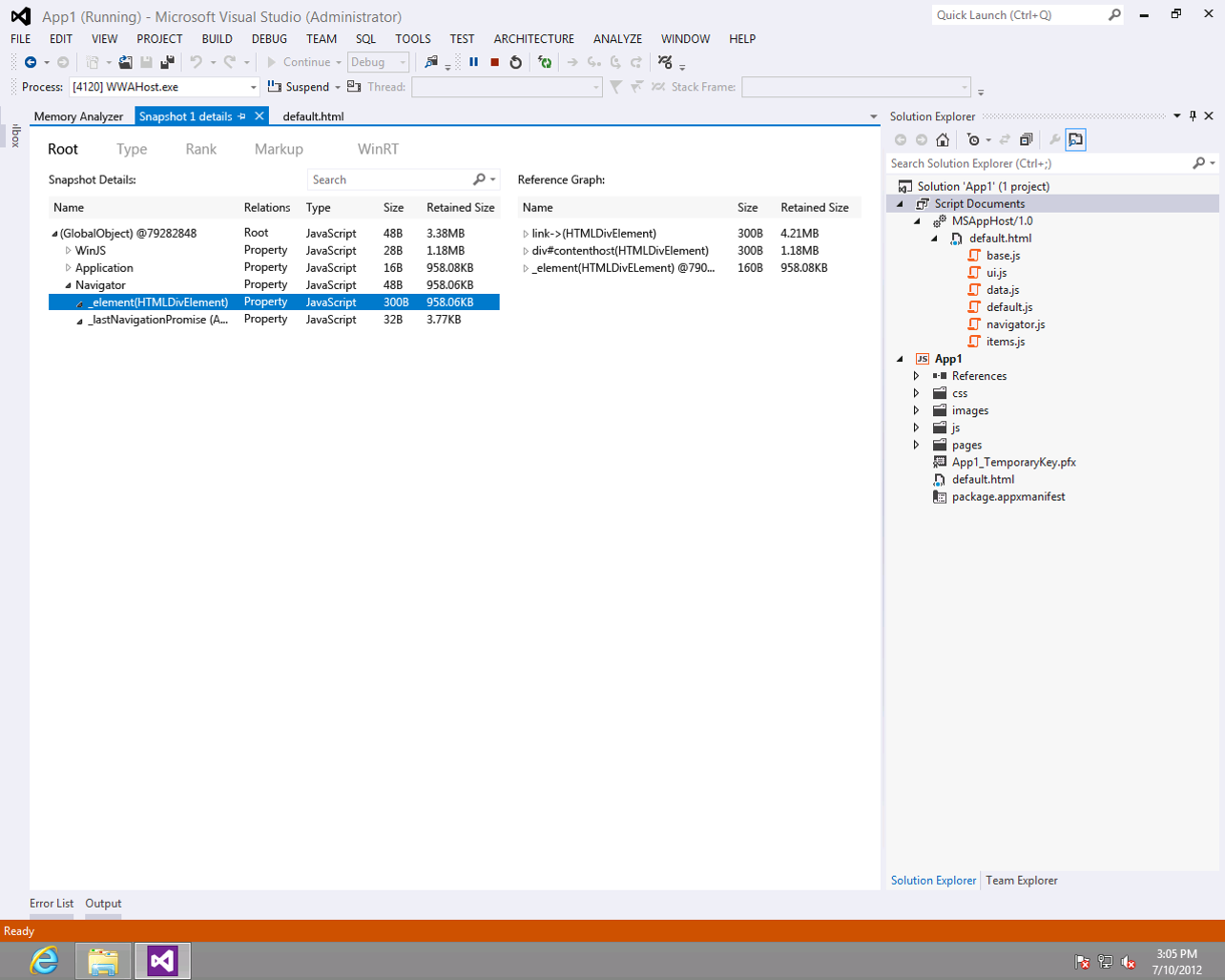

As the most powerful developer tool in the industry, Visual Studio has more than 20 performance tools, including Debugger events, Memory usage, CPU usage, Network usage, GPU usage, graphics debugging, JavaScript Memory, and HTML UI responsiveness. These tools were created by different teams over the years. Their experiences differ, but they share one thing in common: they are complex and hard to use. Our customers described performance debugging as a “dark art” that only a few specialists on their team knew how to practice.

COMPANY

Microsoft

TIME

2015-2016

ROLE

Lead Designer

02 Project planning

As the lead designer, I was part of the planning team for Visual Studio V-Next, which included managers, senior PMs, and senior engineers. We met regularly to discuss the plan for the next release. Performance tools were one of the areas that drew the most customer complaints, so I worked with two PMs to explore their future direction. Admittedly, one reason our performance tools were hard to use was that they required deep domain knowledge of application architecture and performance. As a designer, however, I believe we can always create a better experience that makes our customers’ lives easier, no matter how complex the usage scenarios are.

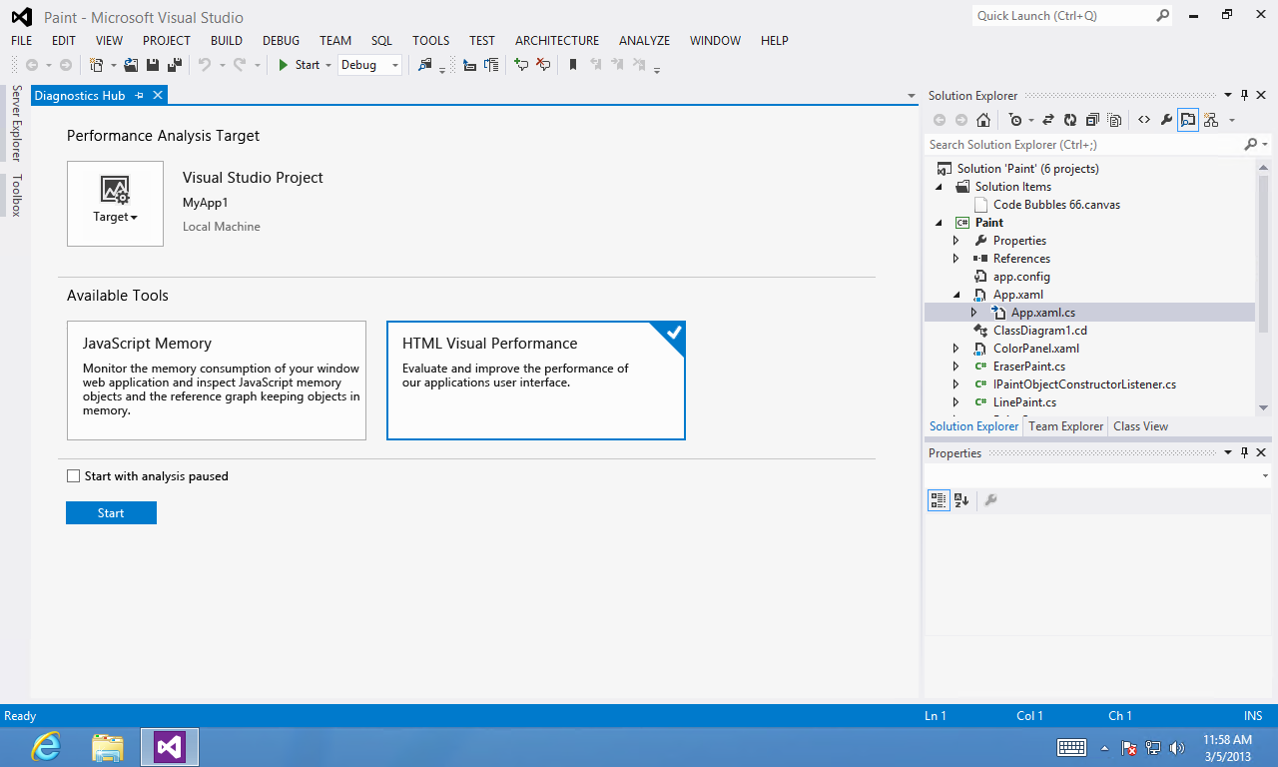

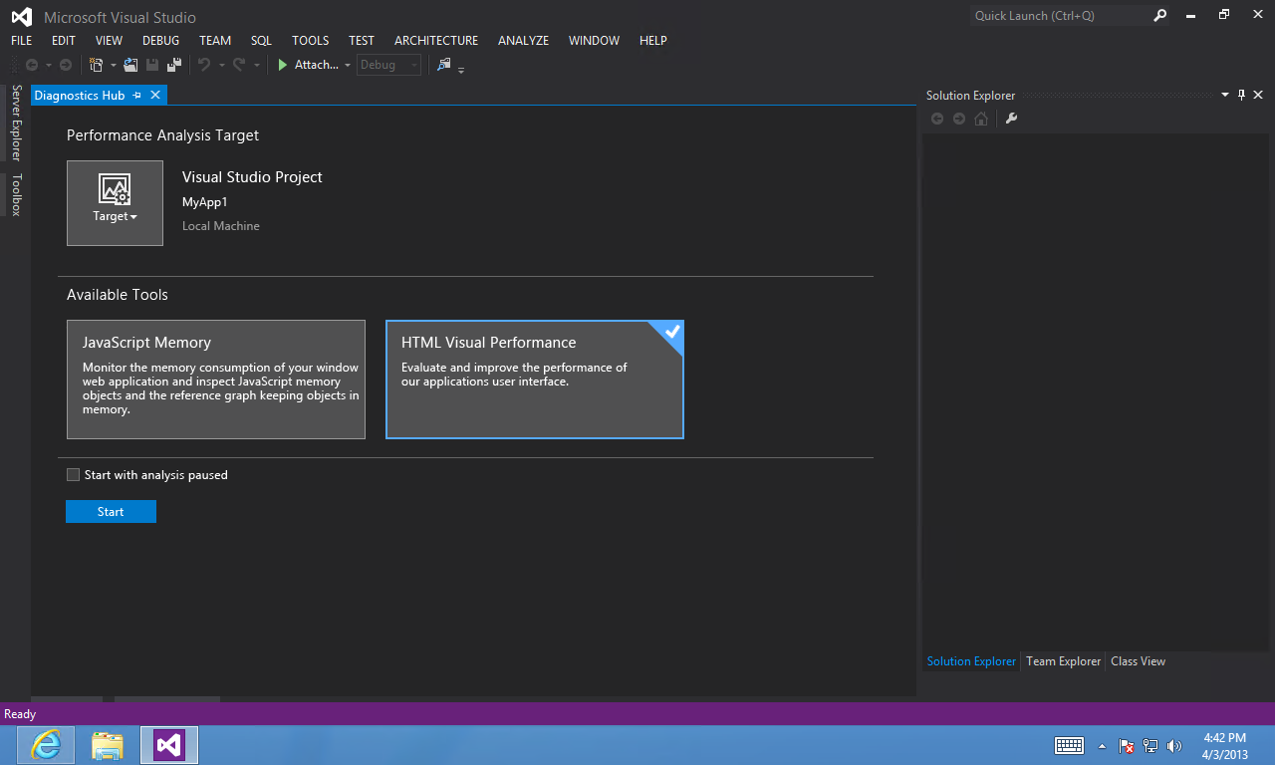

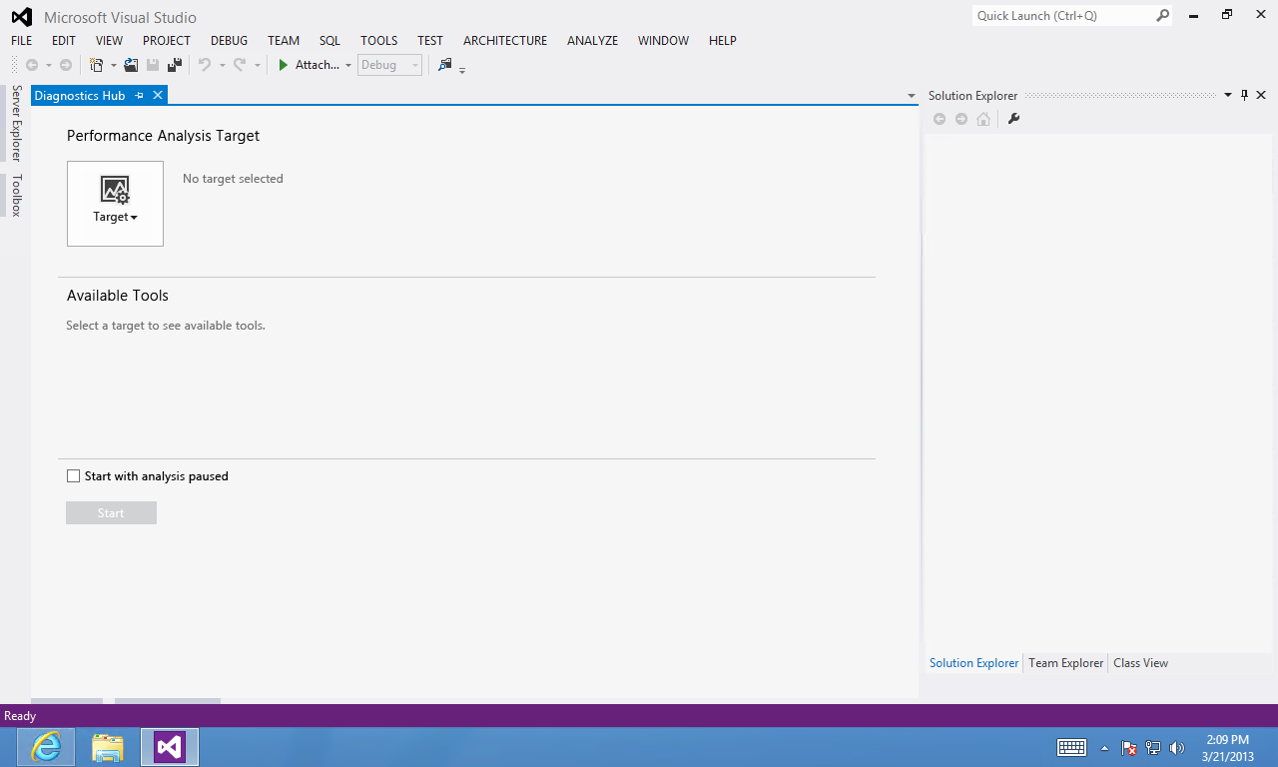

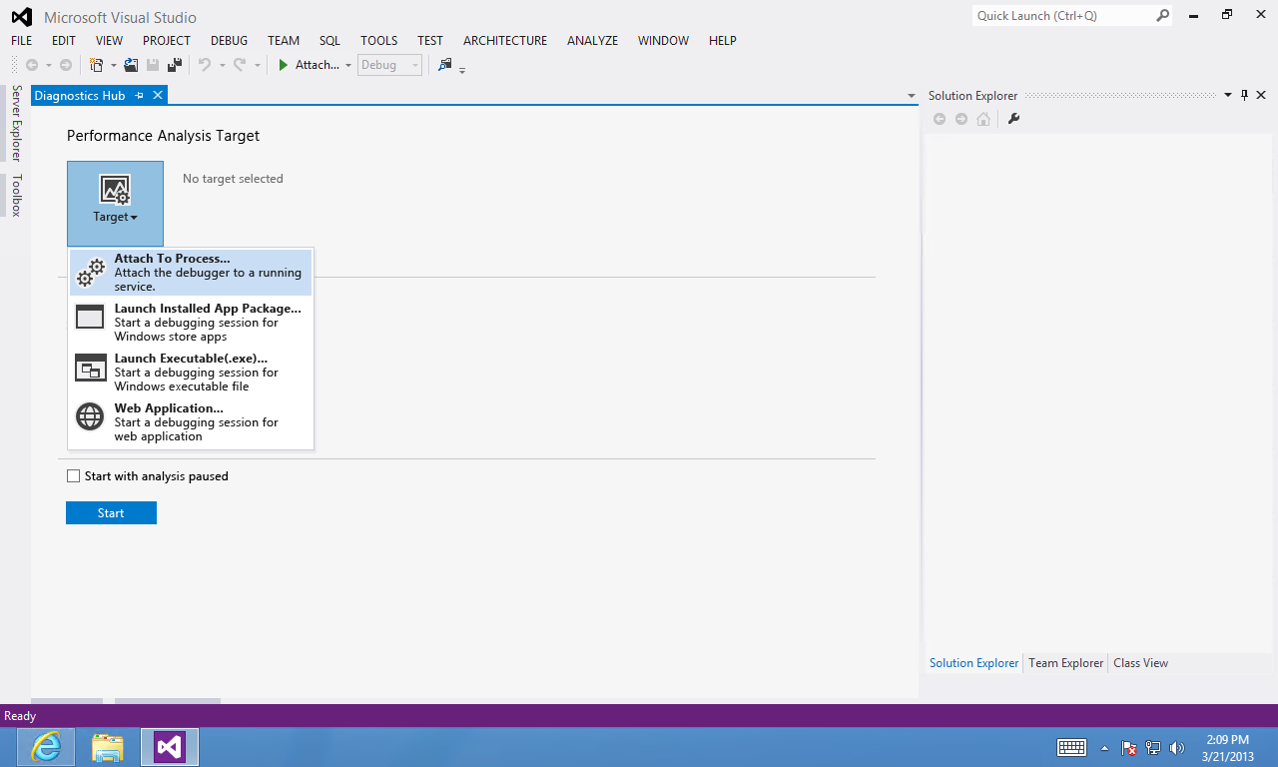

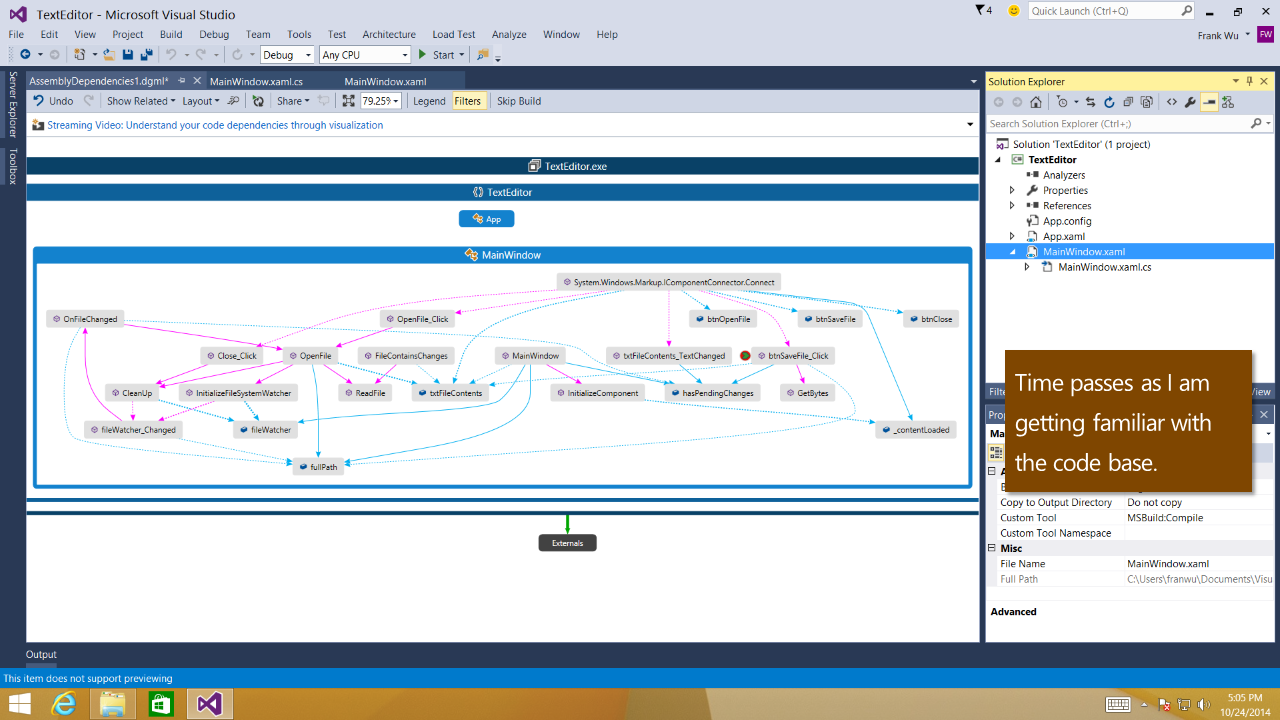

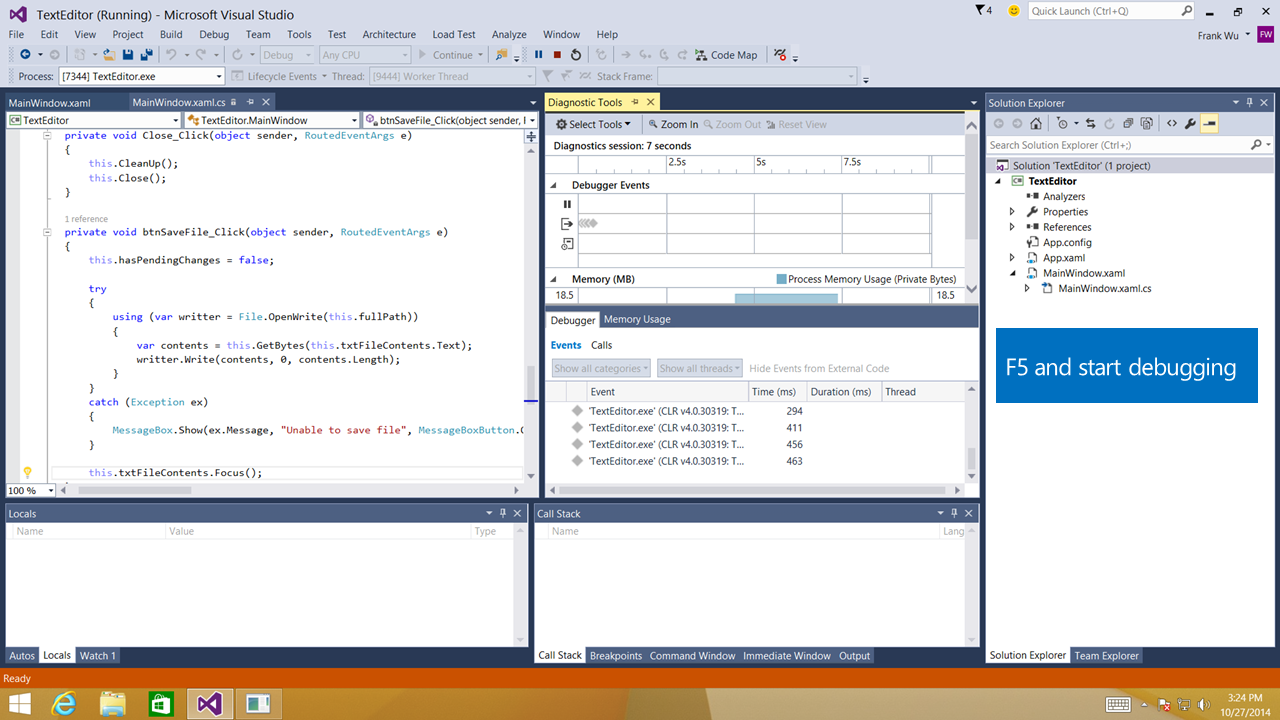

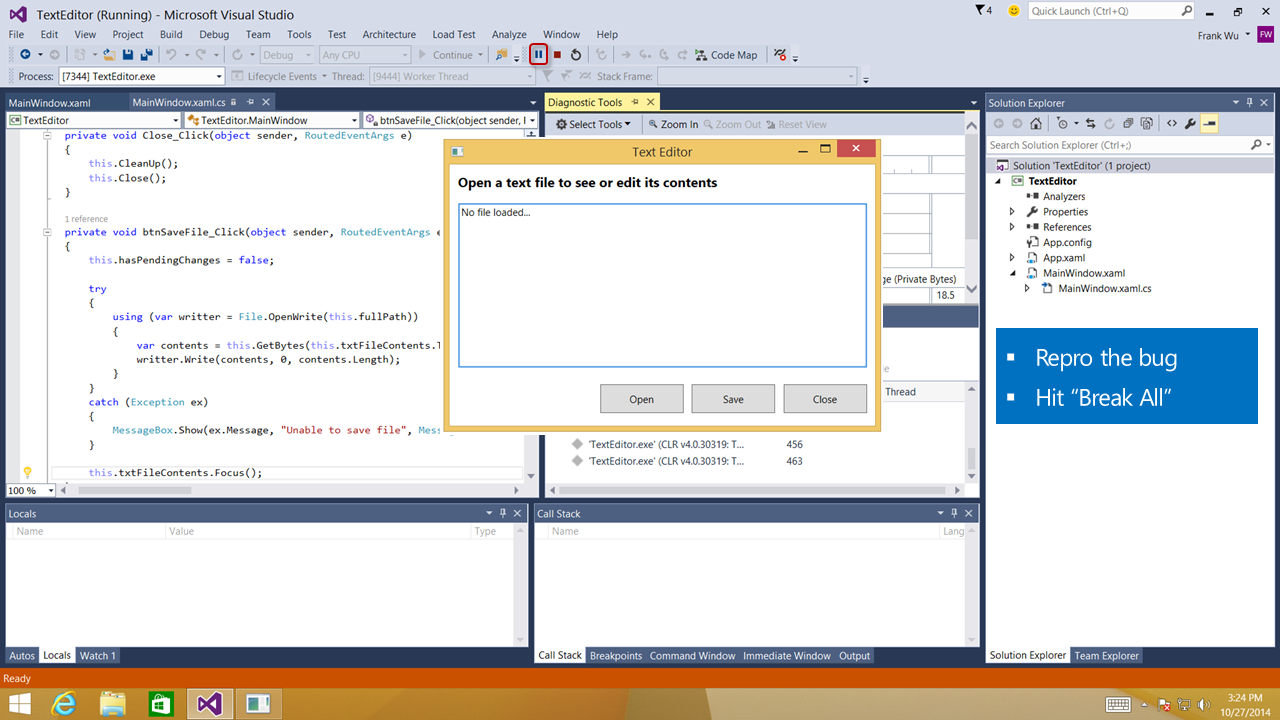

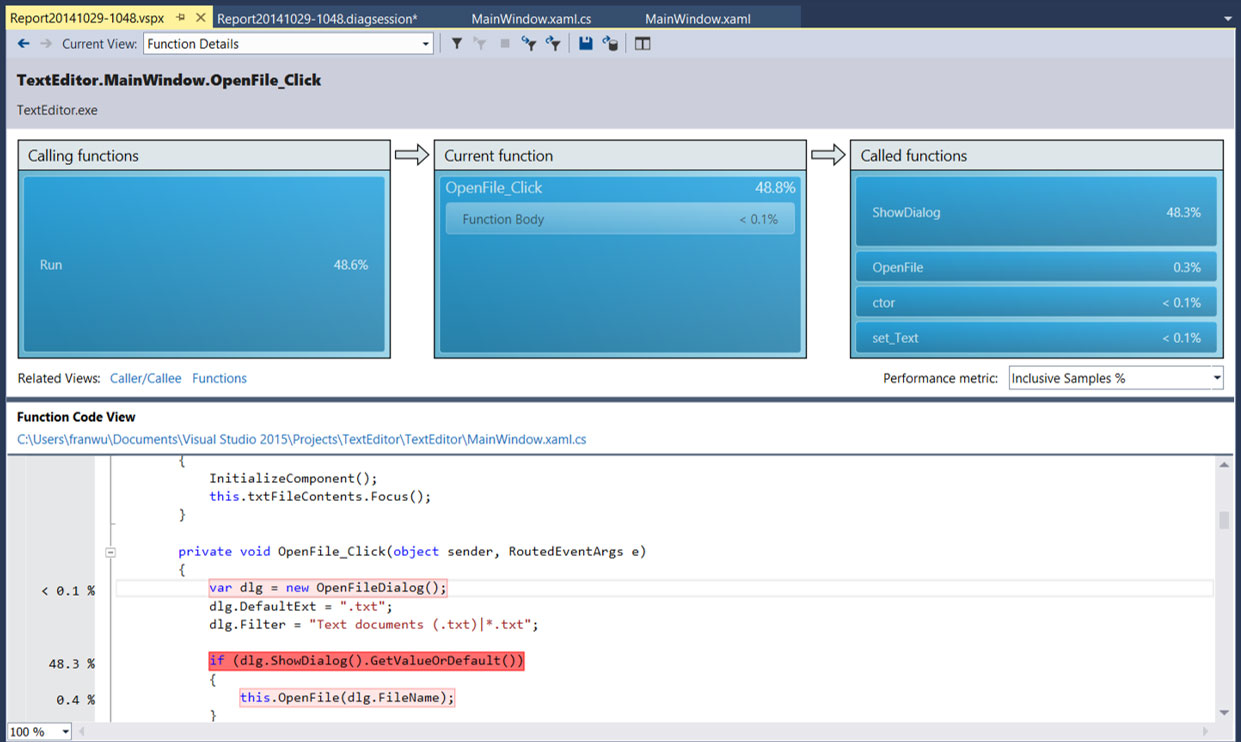

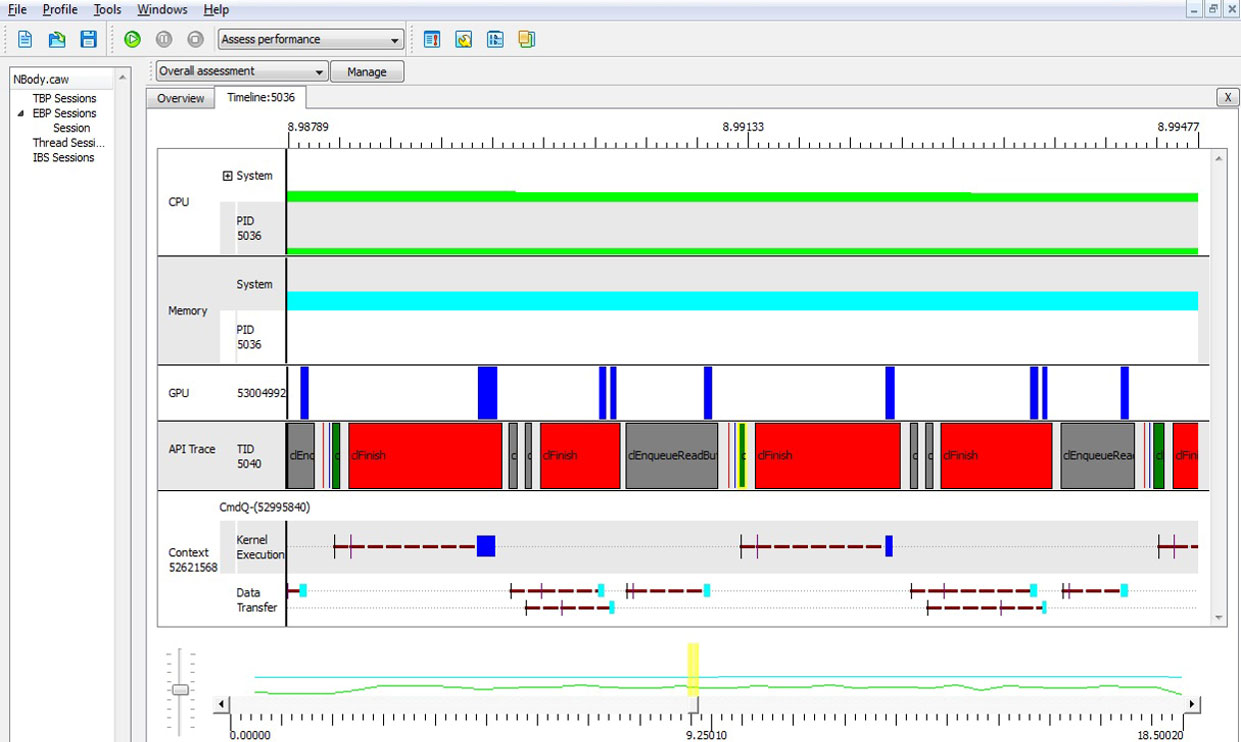

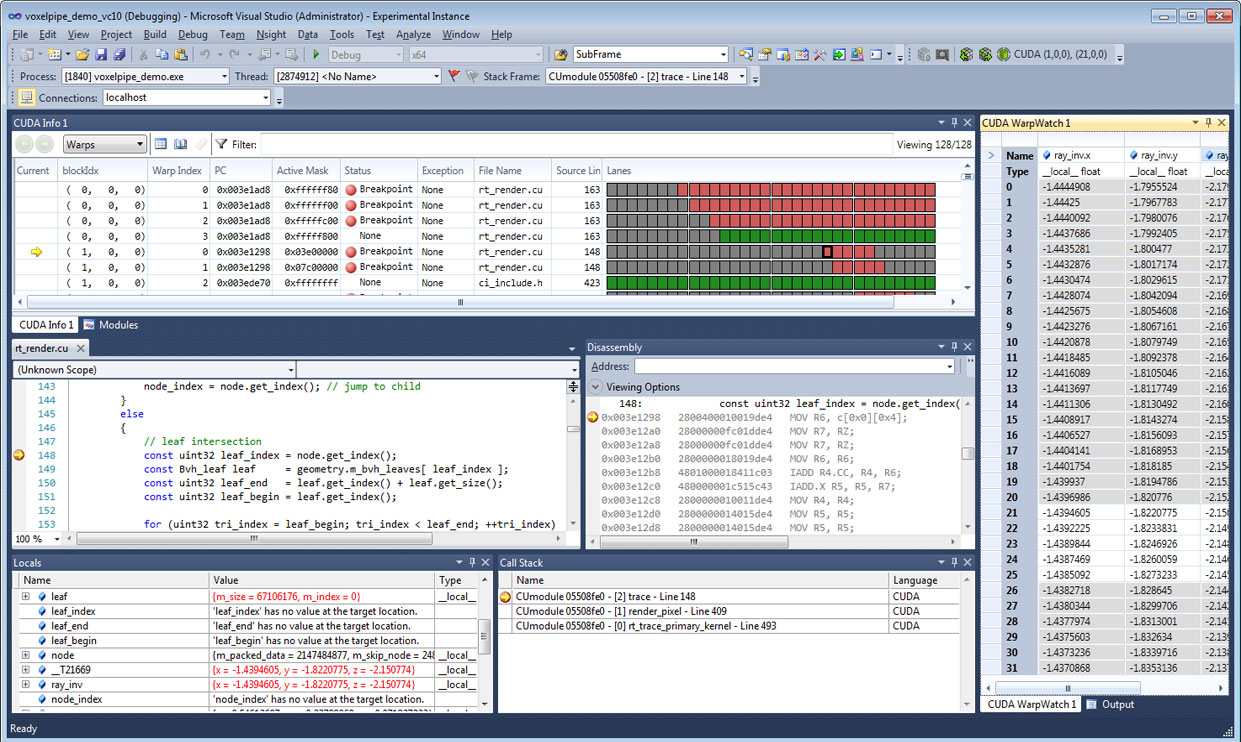

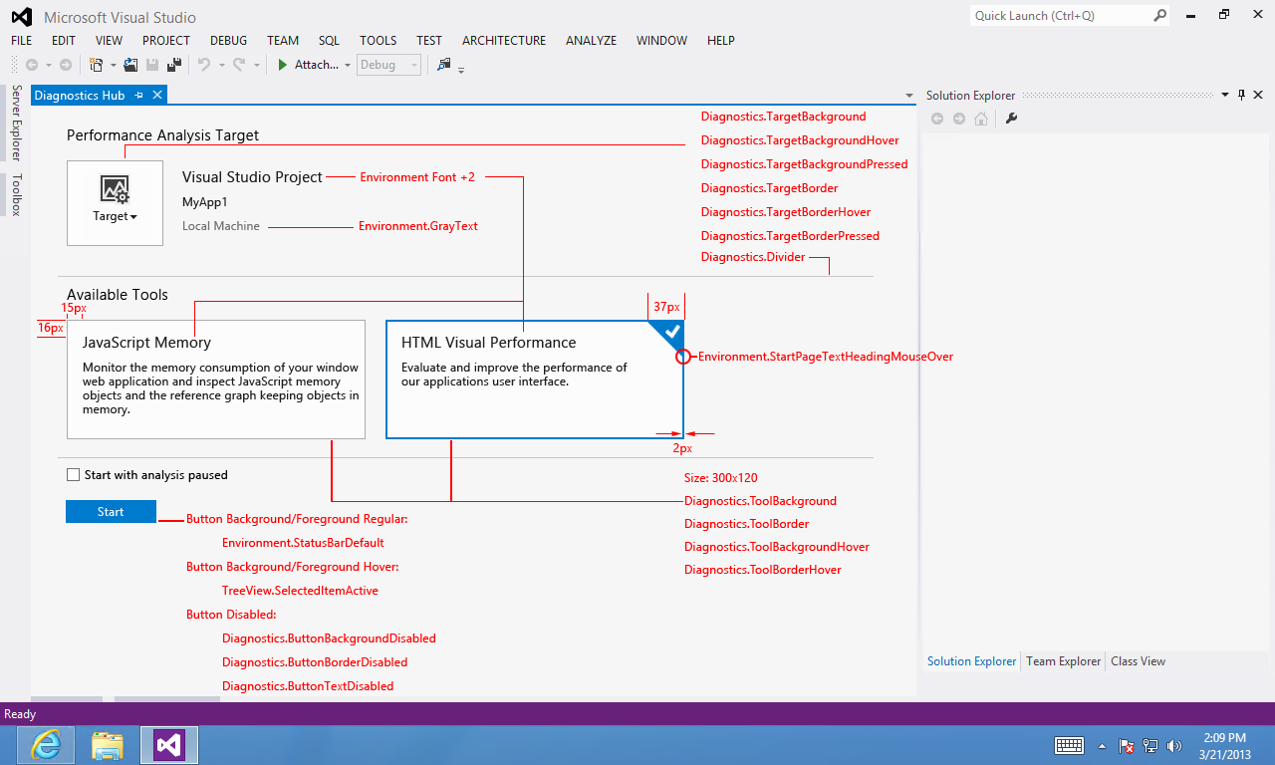

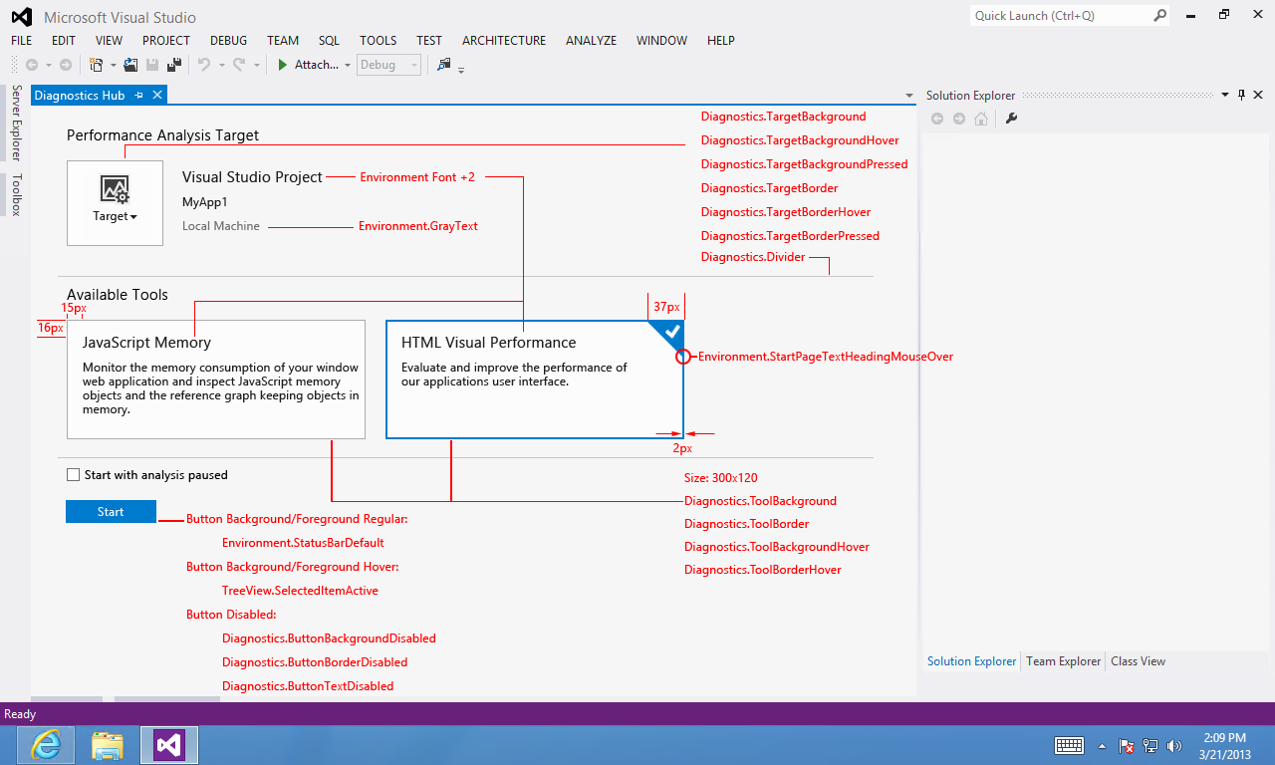

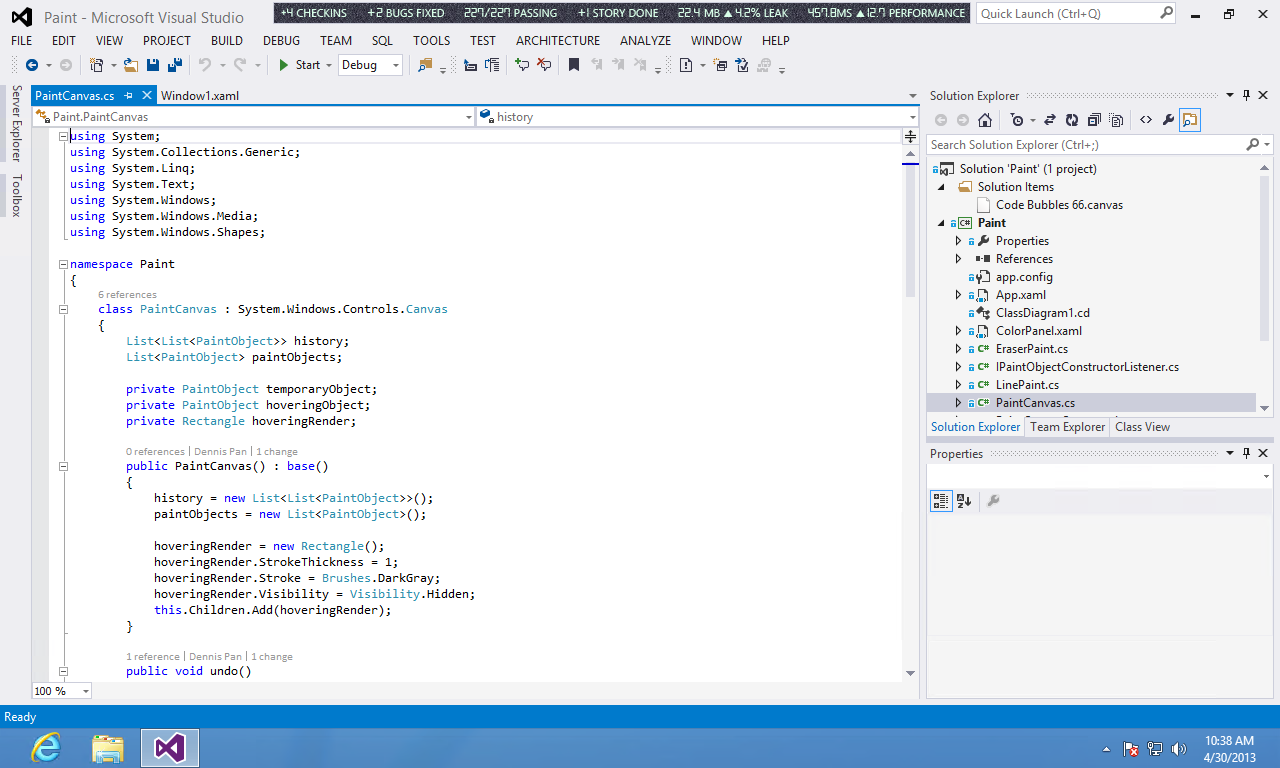

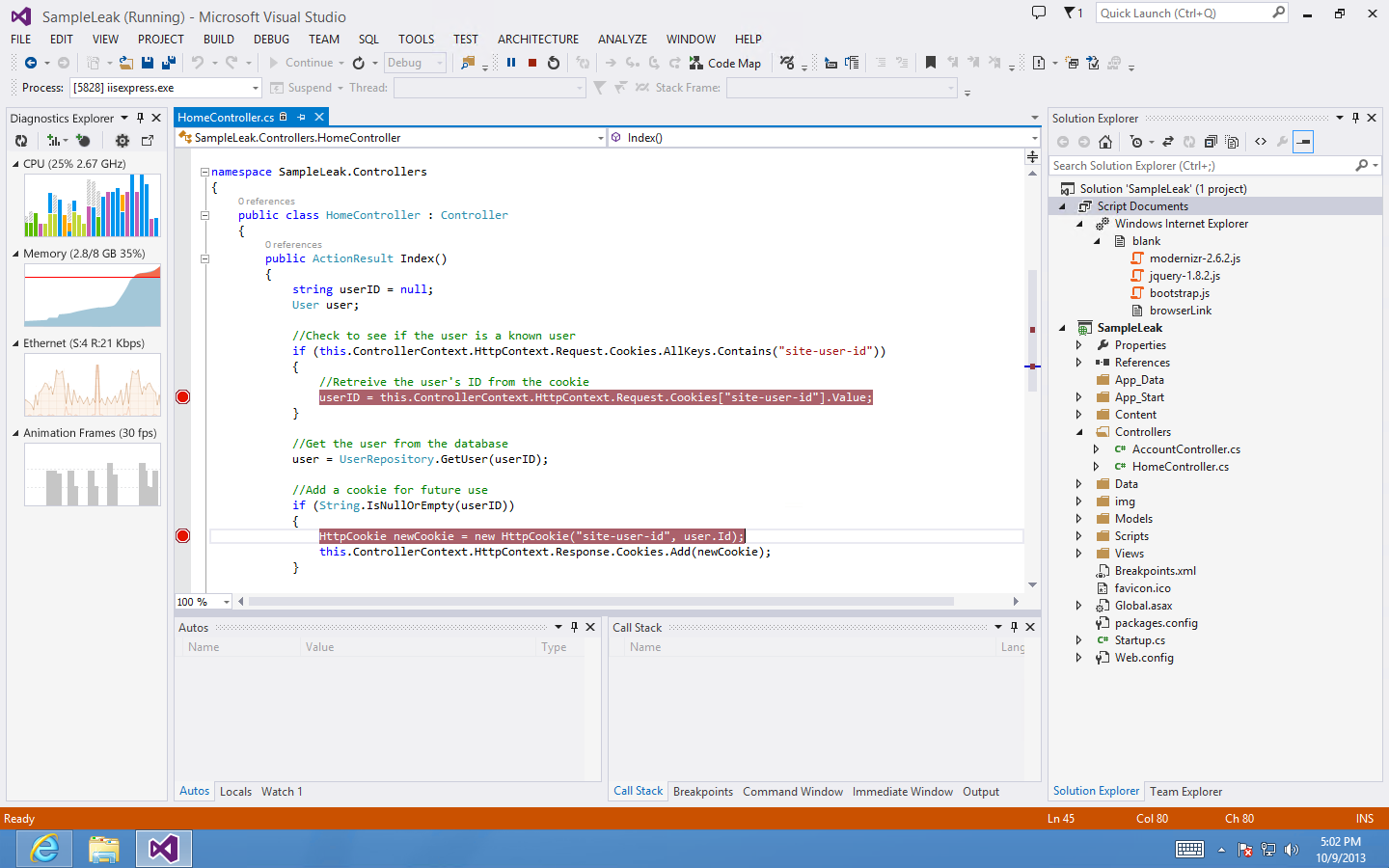

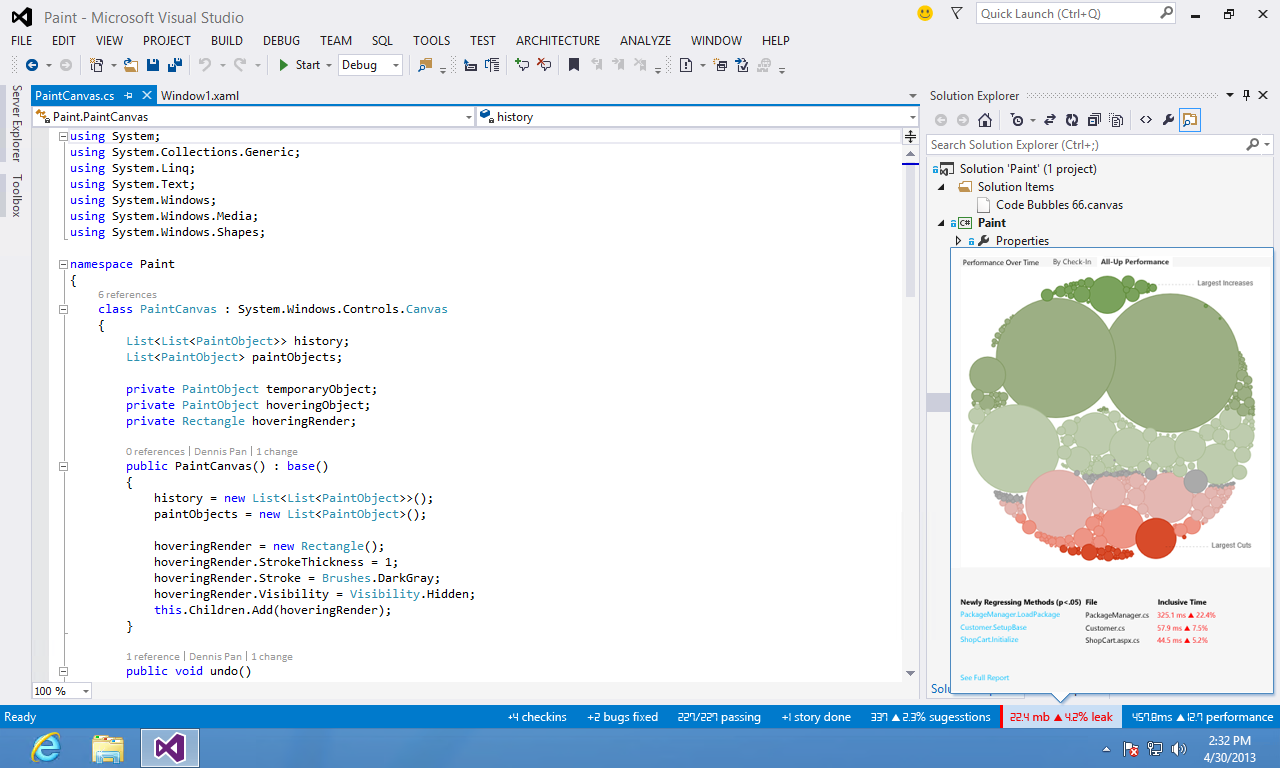

Our first step was to review what we already had. The screenshots show some of the performance tools we had at the time.

03 Performance Hub V1

Because these tools were owned by different teams, the PMs and I began meeting with the teams inside Microsoft that owned them. We invited stakeholders from each team to discuss how to improve the tools.

The top two concerns about these tools were:

- Poor discoverability. Users didn’t know where to find these tools because they were scattered all over the product.

- Inconsistent experience. Users didn’t know how to use a new performance tool, even if they had used other tools before.

All the stakeholders agreed that creating a centralized platform to host all the performance tools was the right direction. We then discussed and investigated the requirements for such a platform.

Target user

The target users for this effort were senior and lead developers who had performance-related domain knowledge and had used performance tools before. We wanted to create a better experience for them.

Design Goals

“Create an extensible hosting environment for all Microsoft performance tools, that provides customers a single launch point and consistent analysis experience”

- Entry point: Tools that support launching will be detected by the hub and displayed as an option for the user.

- Real-time data viewing: Tools inside the hub will be able to define views that allow the user to interact with the tool in real time.

- Postmortem log viewing: Tools will be able to open an analysis view after profiling.

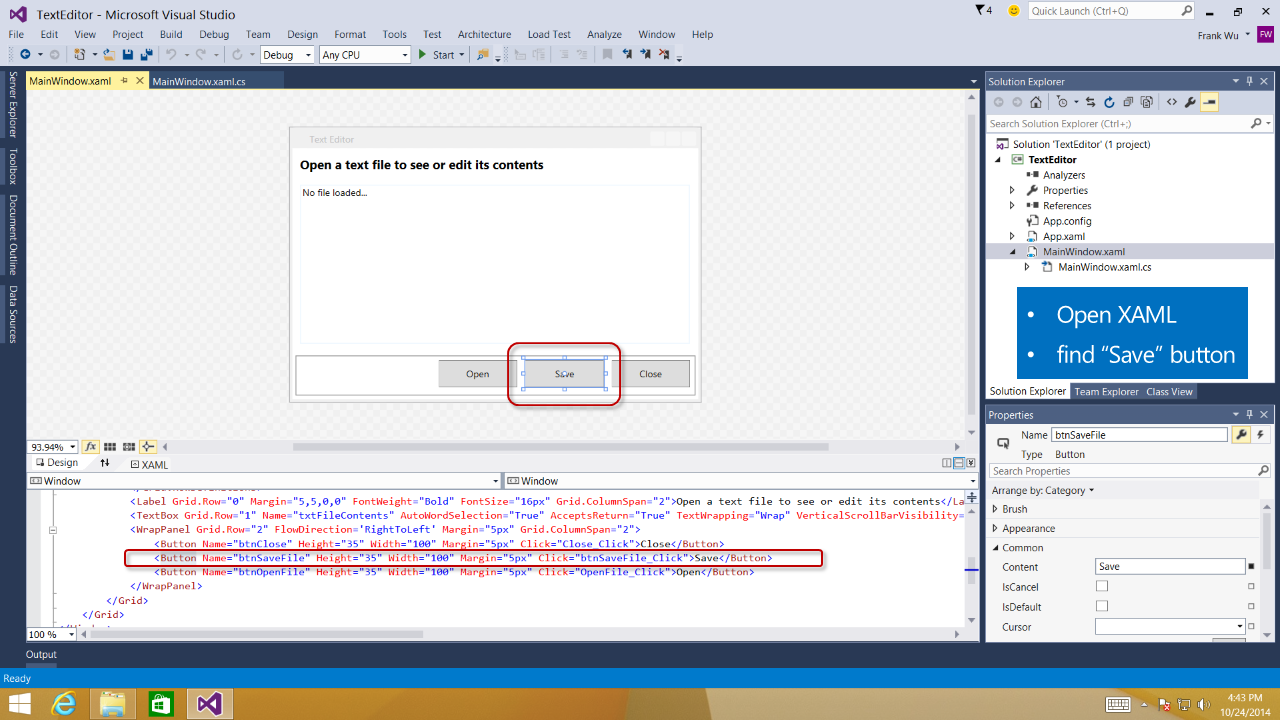

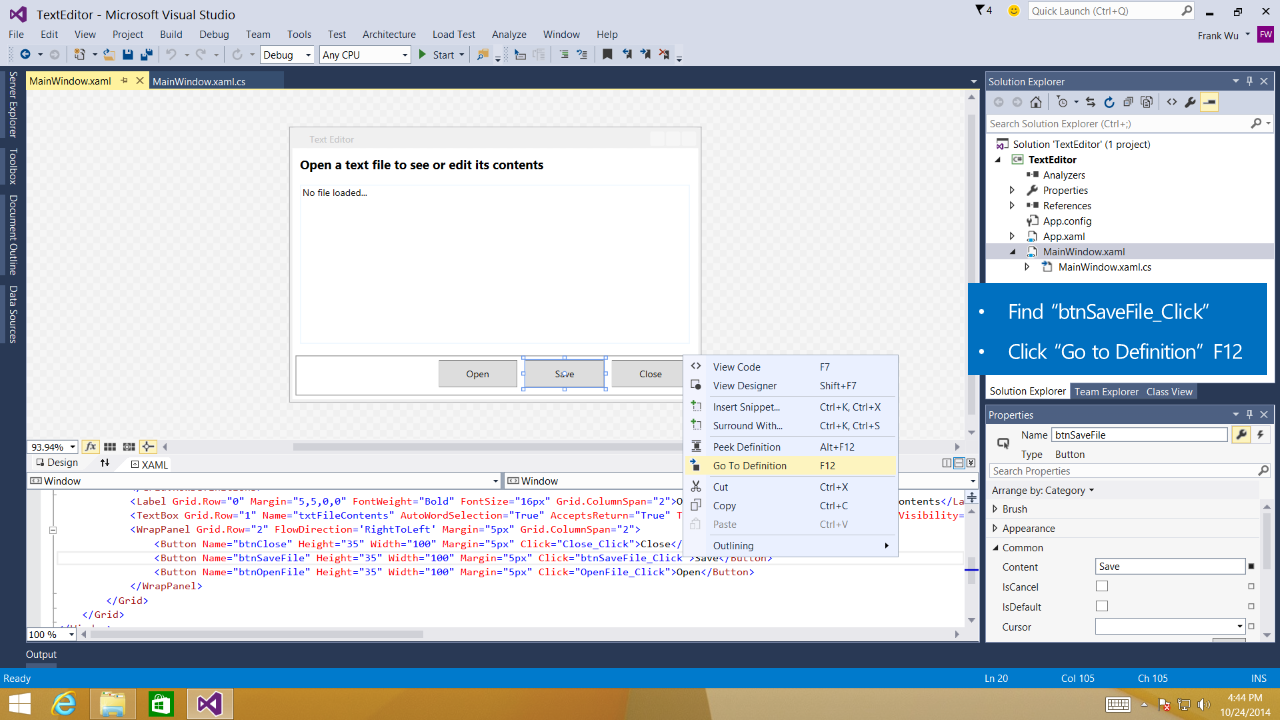

Early design and iterations

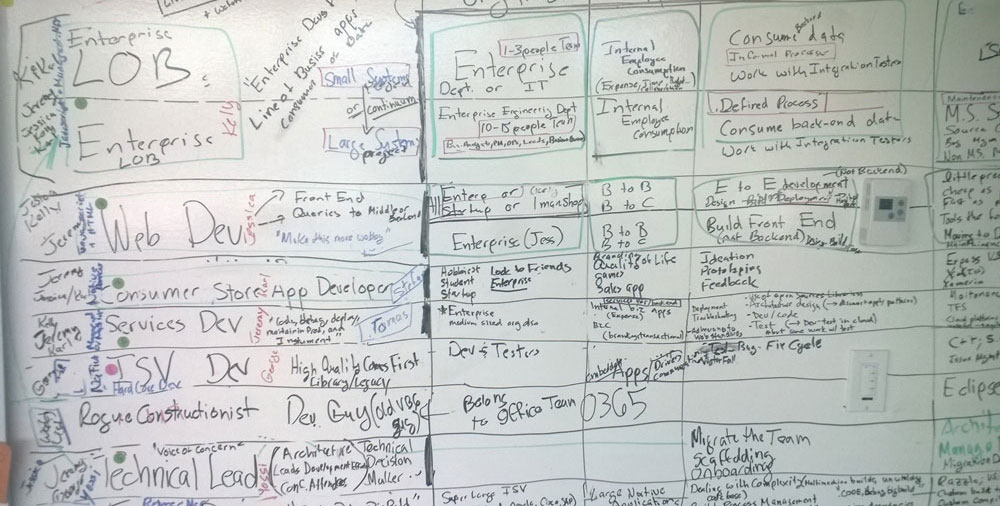

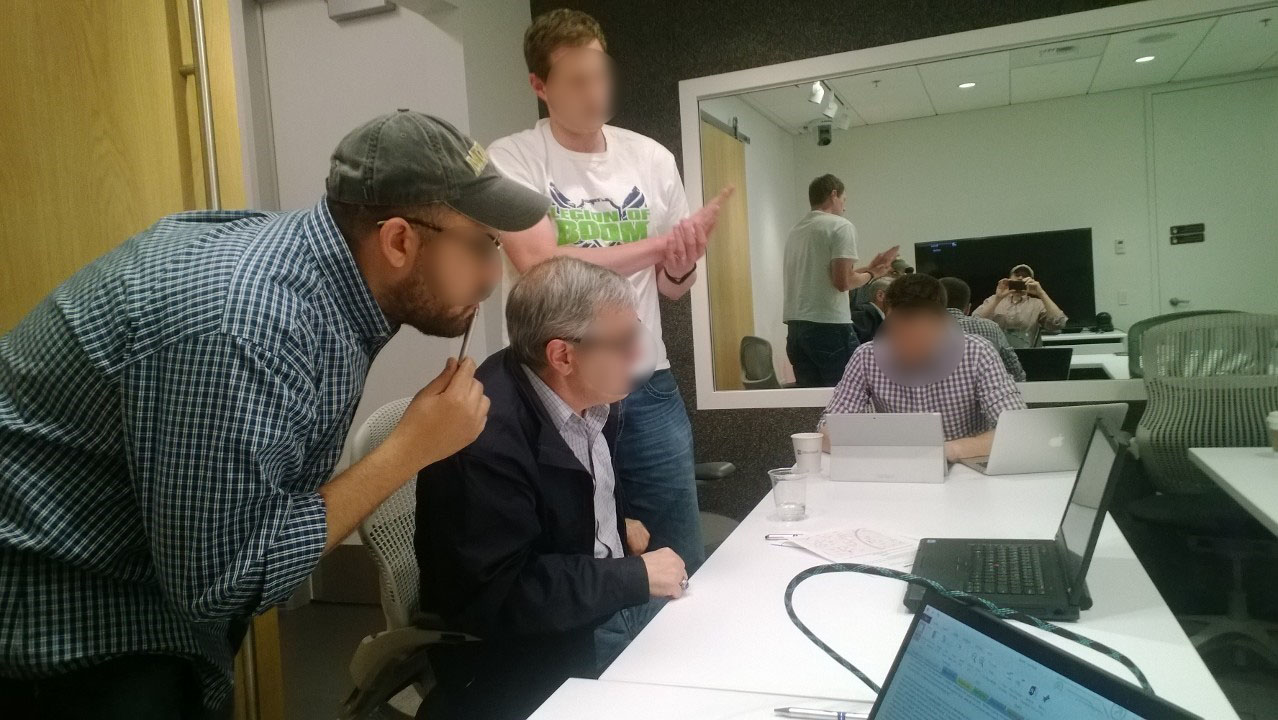

Once we understood the problem we were trying to solve and had set a clear design goal, the next step was to brainstorm ideas for the new hub. We came up with many different ideas and created paper sketches and wireframes for them. Every week, the researcher validated these ideas with users while other team members observed. One tough challenge was communicating and testing these ideas with both users and the different product teams. On one hand, user feedback was key to validating a design idea. On the other hand, this was a company-wide, cross-team project. I could not simply go to other teams, say “this is the design our users like,” and expect them to implement exactly what I designed. Besides, there is almost always more than one design that users like. So we iterated on the design based on feedback from both our users and our partners. Through a great deal of communication and collaboration, we arrived at a design that both tested well with users and got buy-in from all partner teams.

Final design

Below is the final walkthrough of Performance Hub V1.

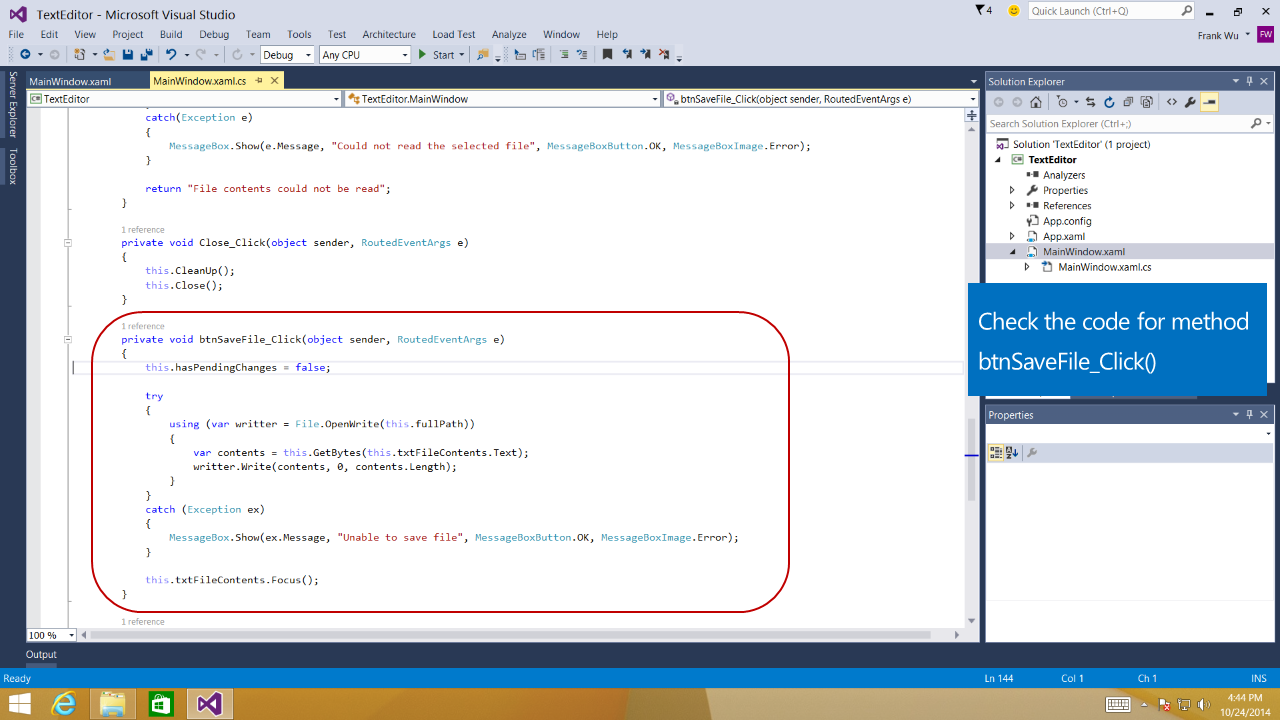

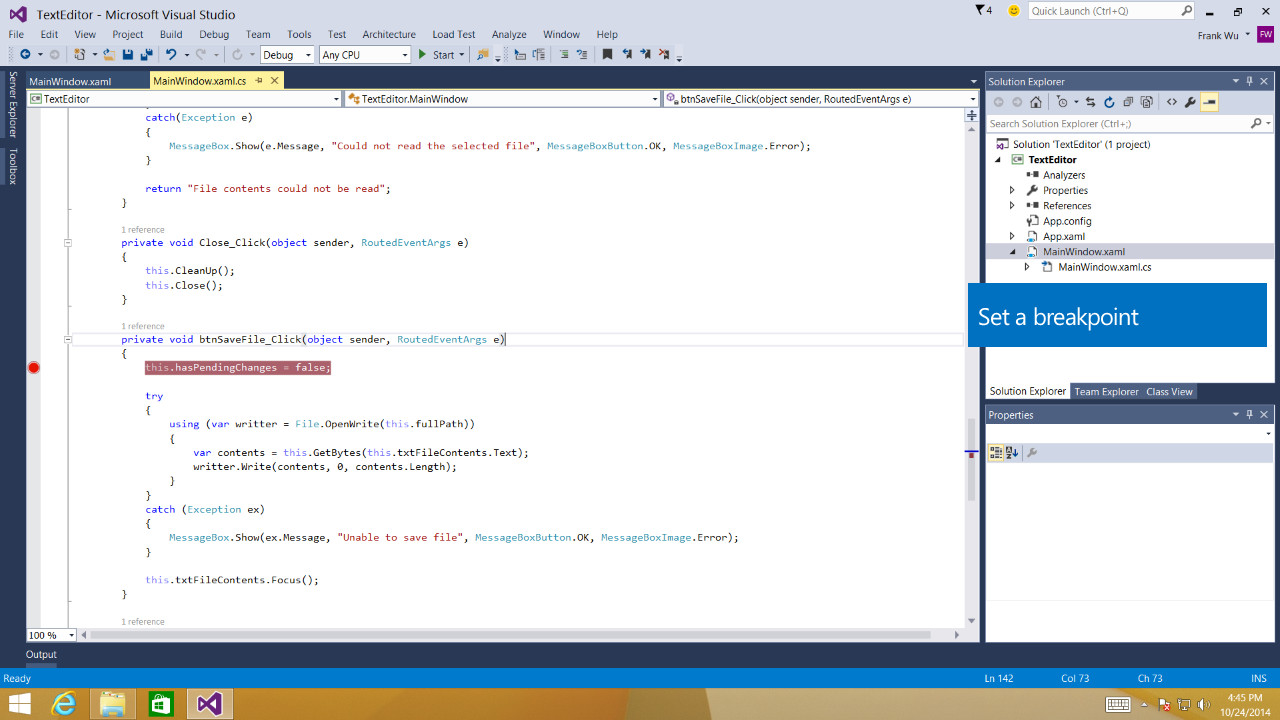

Redlines

After we finalized the design, I created redlines and a visual spec and handed them over to the engineers on my team. They implemented the framework, which provided APIs and interfaces for both the backend and the UI elements. Partner teams could use these APIs and interfaces to plug their tools into the hub. After a partner team implemented its tool inside the hub, I reviewed the implementation to make sure the user experience had been implemented correctly.

04 Performance Hub V2

After releasing Performance Hub V1, we received positive feedback from both external customers and leadership. We now had a much more consistent and improved profiling experience across all Microsoft platforms. But we did not stop there. As a designer, I believe there is always room for improvement.

We want developers to build better apps. Performance is often one of the last things developers worry about, since their primary focus is building features into their apps. However, performance is one of the keys to an app’s success. It also requires developers to learn a different set of profiling tools. Today’s profiling experience is too disconnected from the code, and that is what makes it hard to understand. Sometimes, by the time developers do think about performance, it’s too late: they don’t have enough time to invest in it.

Target user

The target users for V2 were both senior developers and regular app developers on the team. We wanted to make the tooling better for senior developers while making it easy for junior developers to find performance problems earlier in their development cycle.

Design Goals

“Create a brand-new performance debugging experience that enables our customers to be better developers”

Design Concept

We tried to create something new, something that had never existed in Visual Studio or anywhere else. We brainstormed and came up with all kinds of crazy ideas. Below are some examples.

User research

We looped in users at a very early stage, when we had only rough ideas. The researcher hosted many interviews and co-design sessions with customers who had signed NDAs.

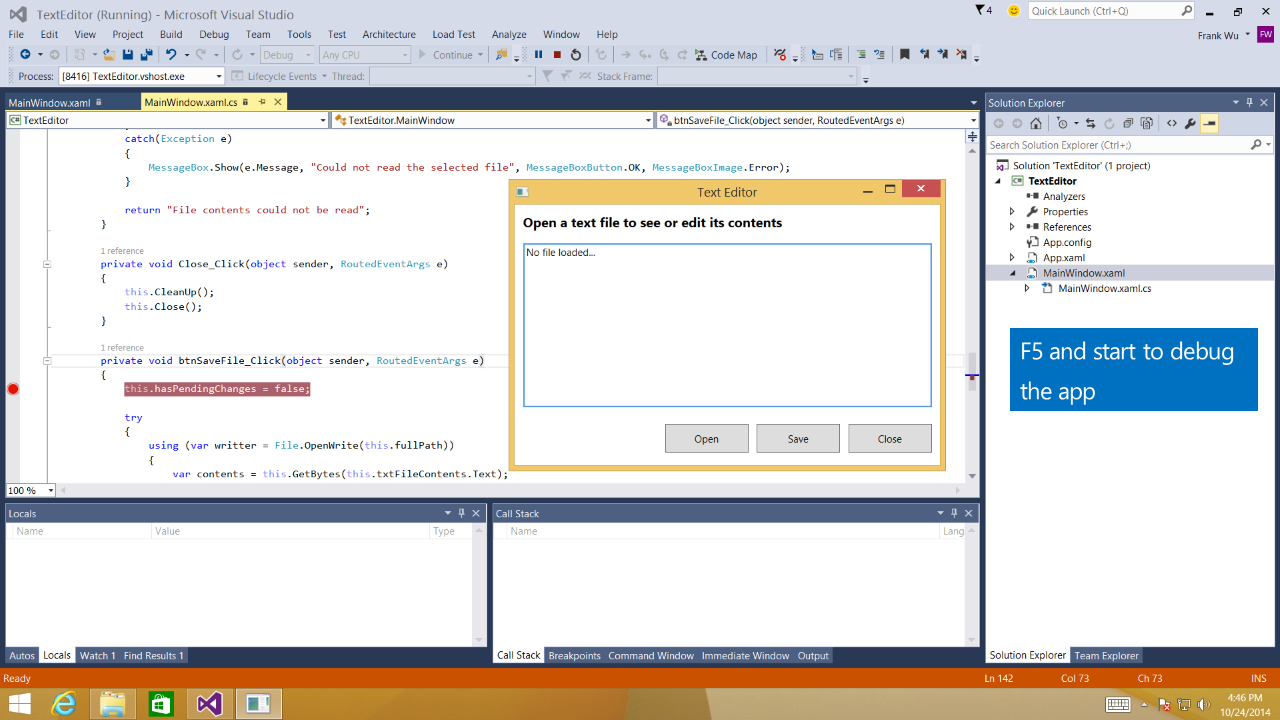

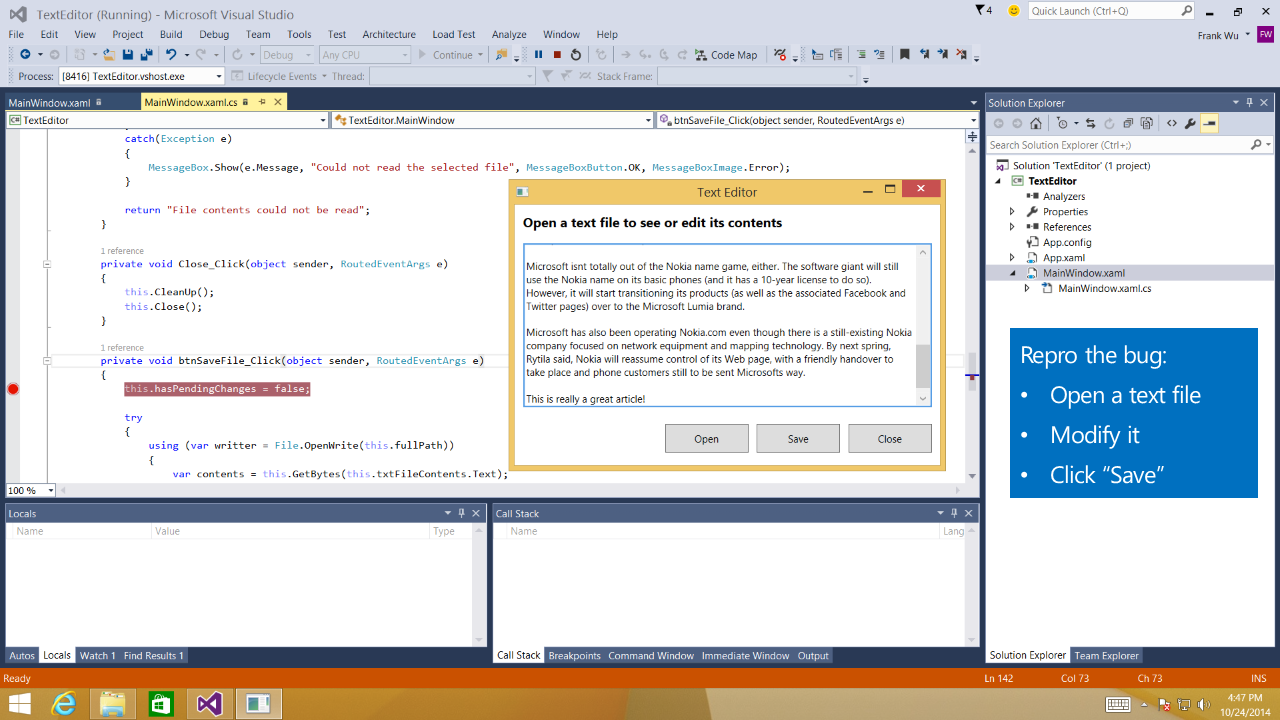

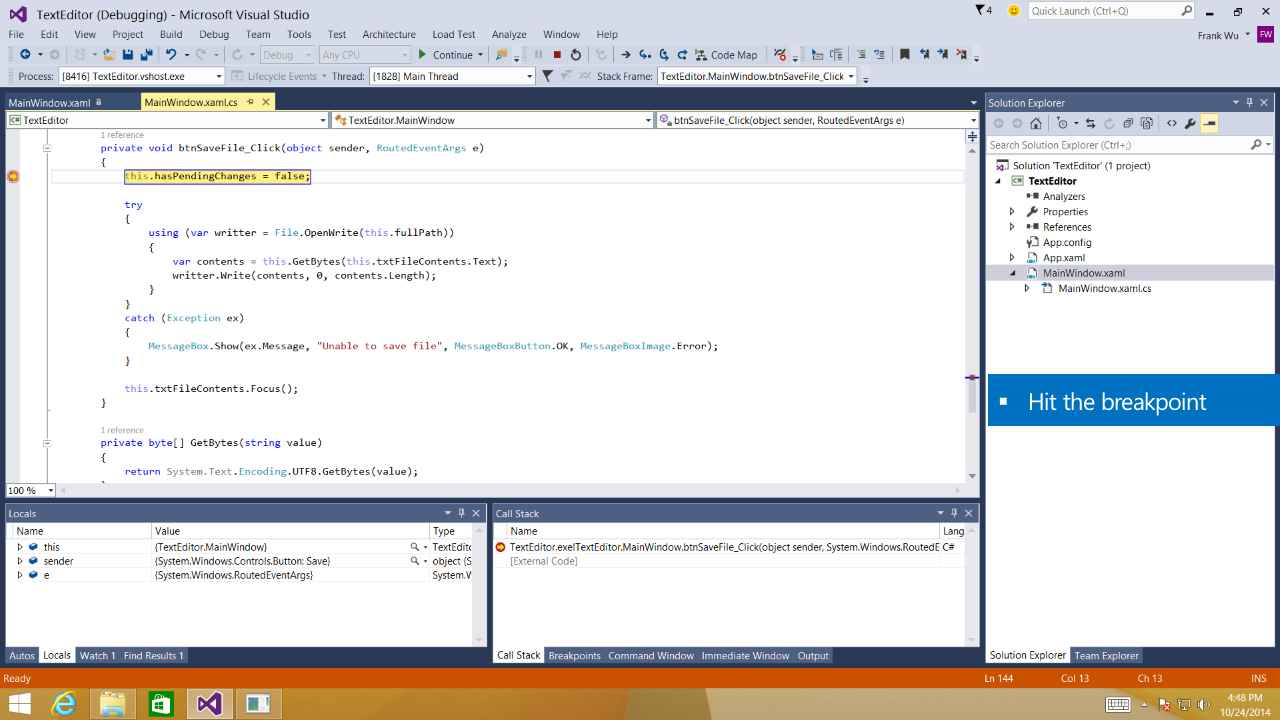

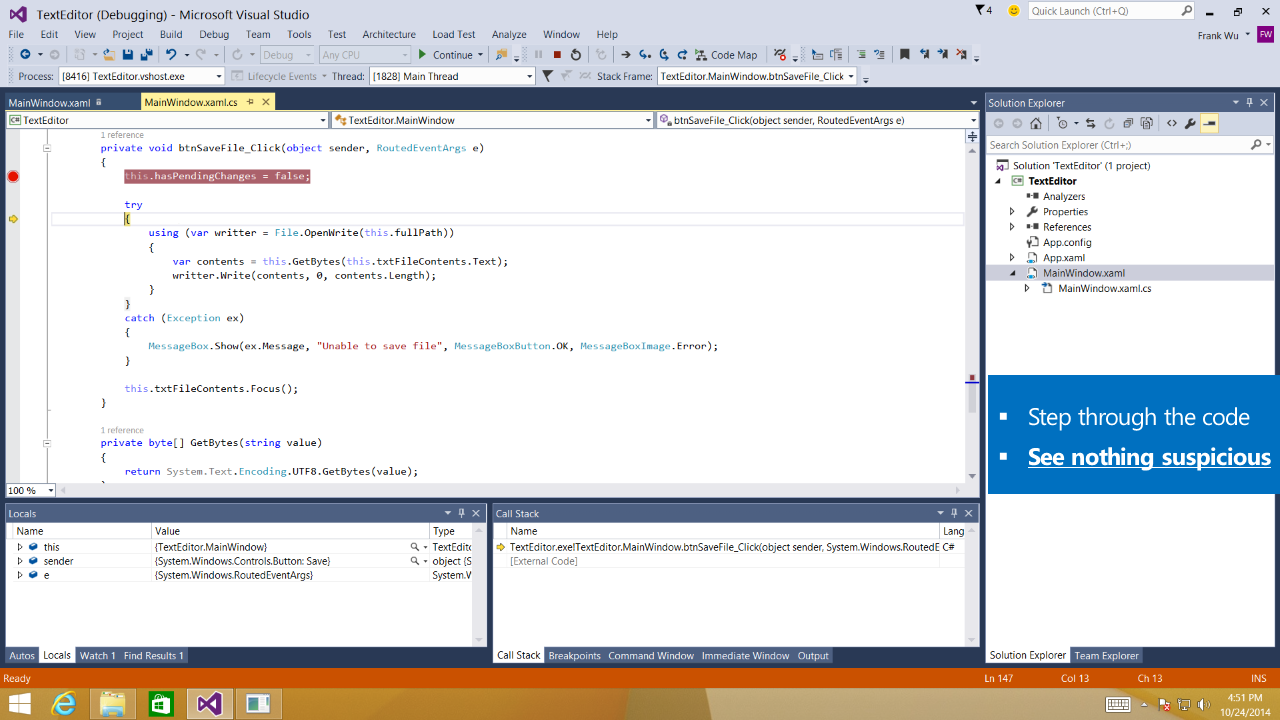

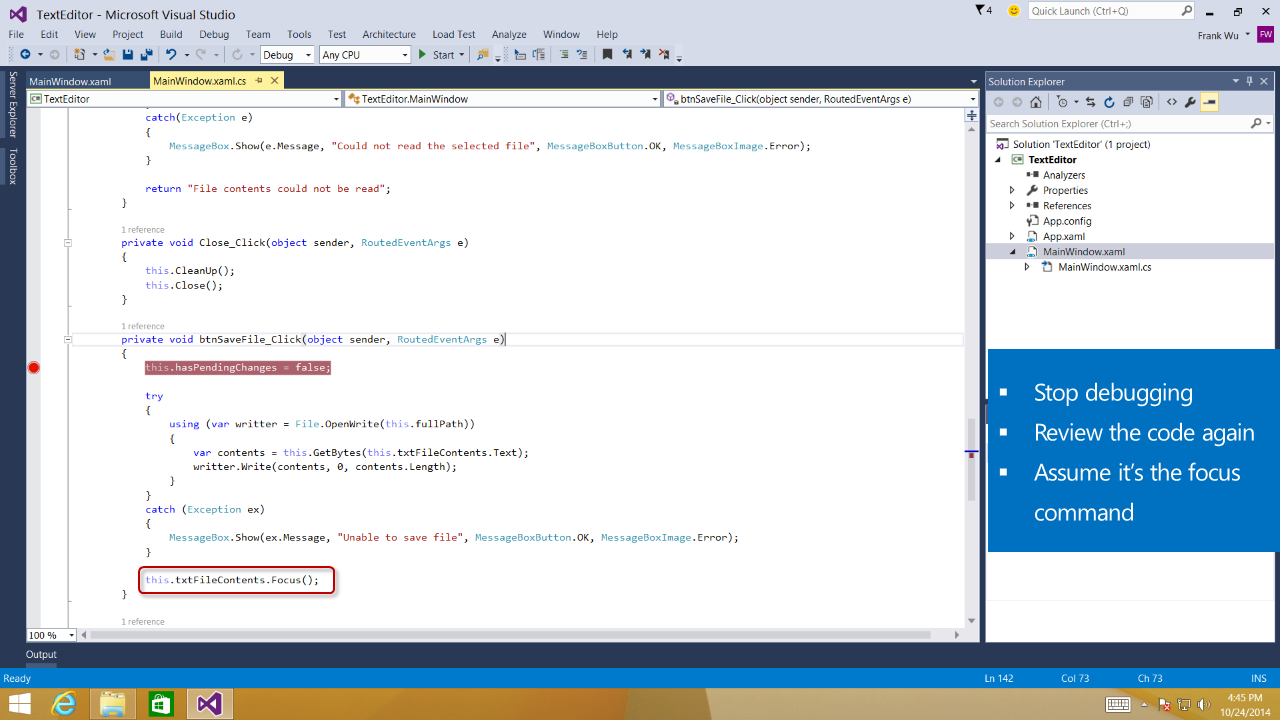

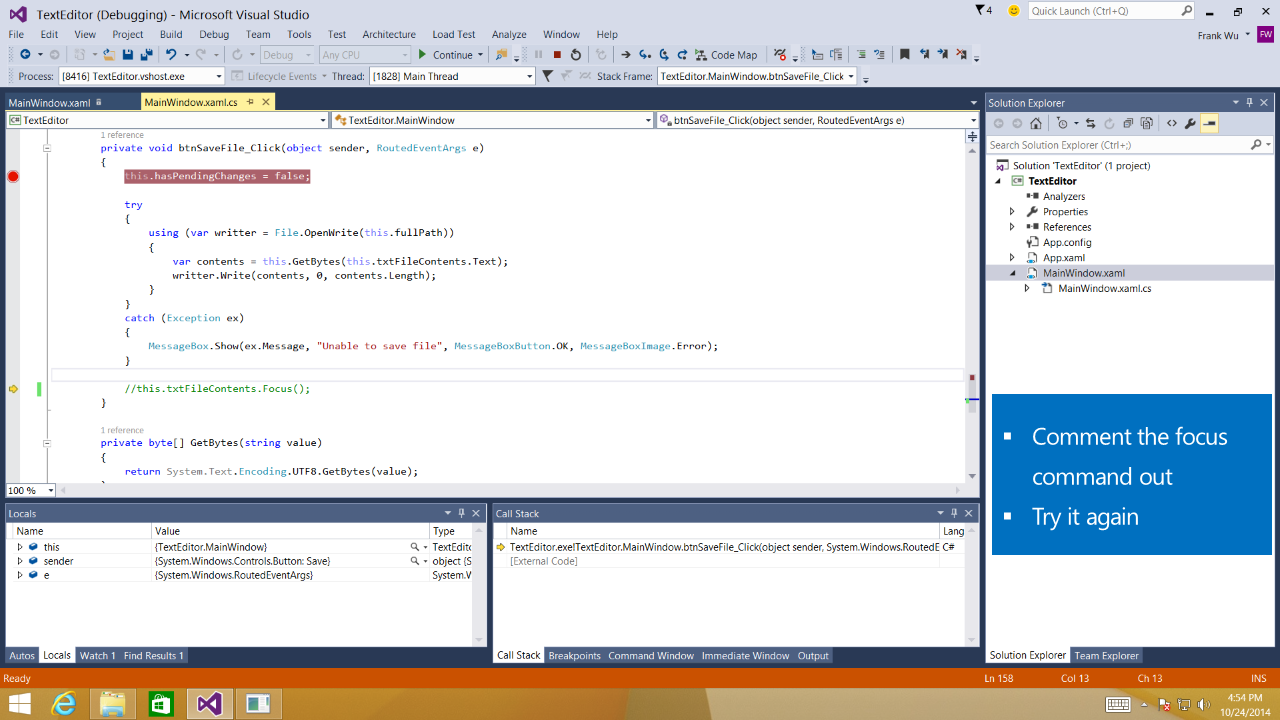

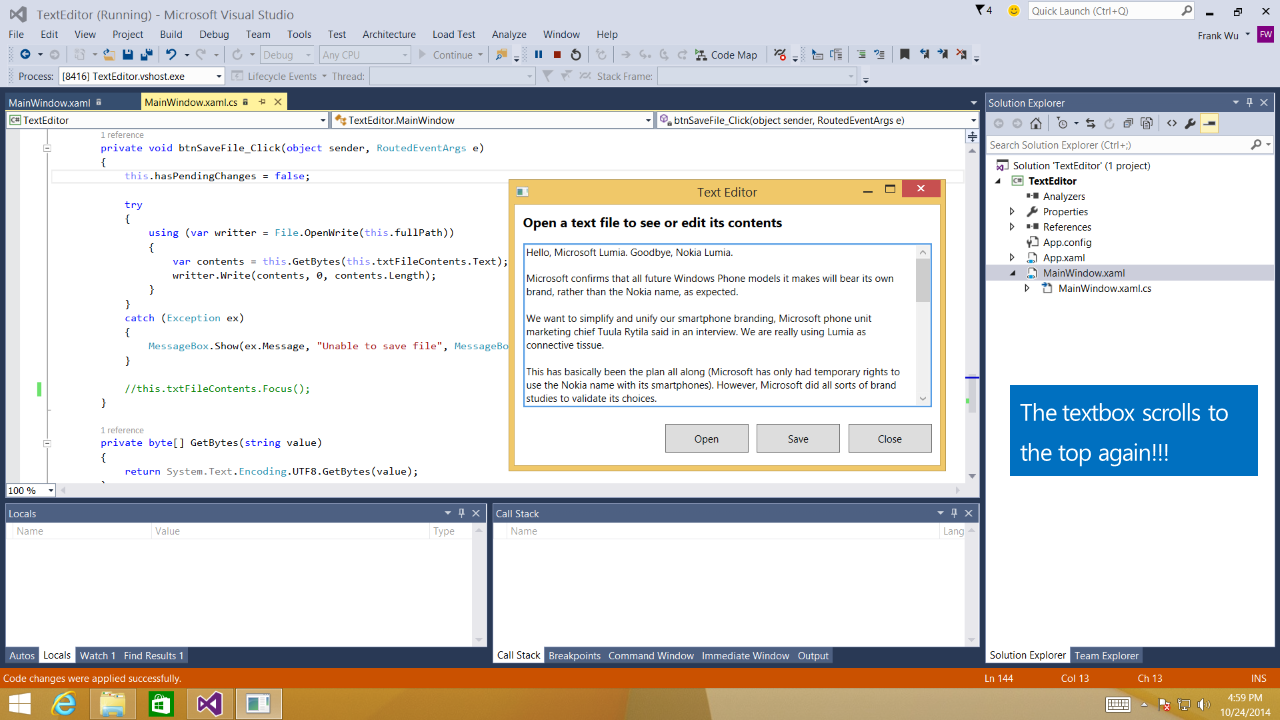

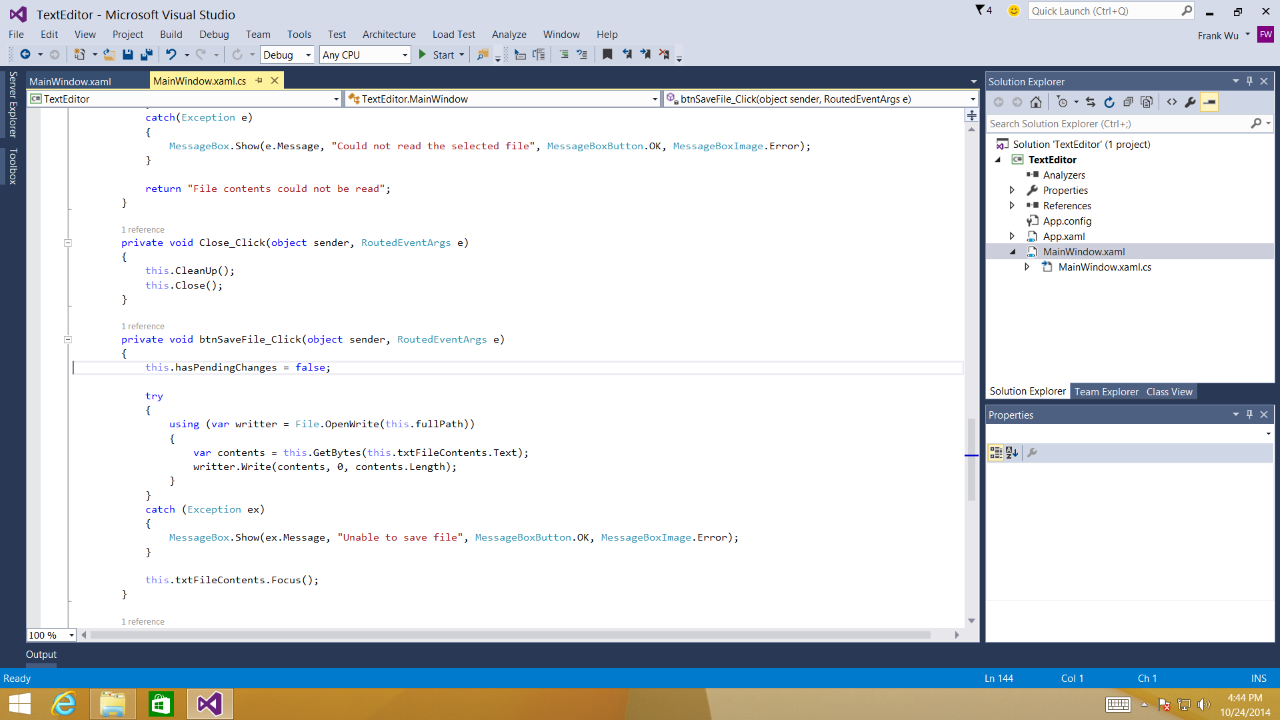

Final Design

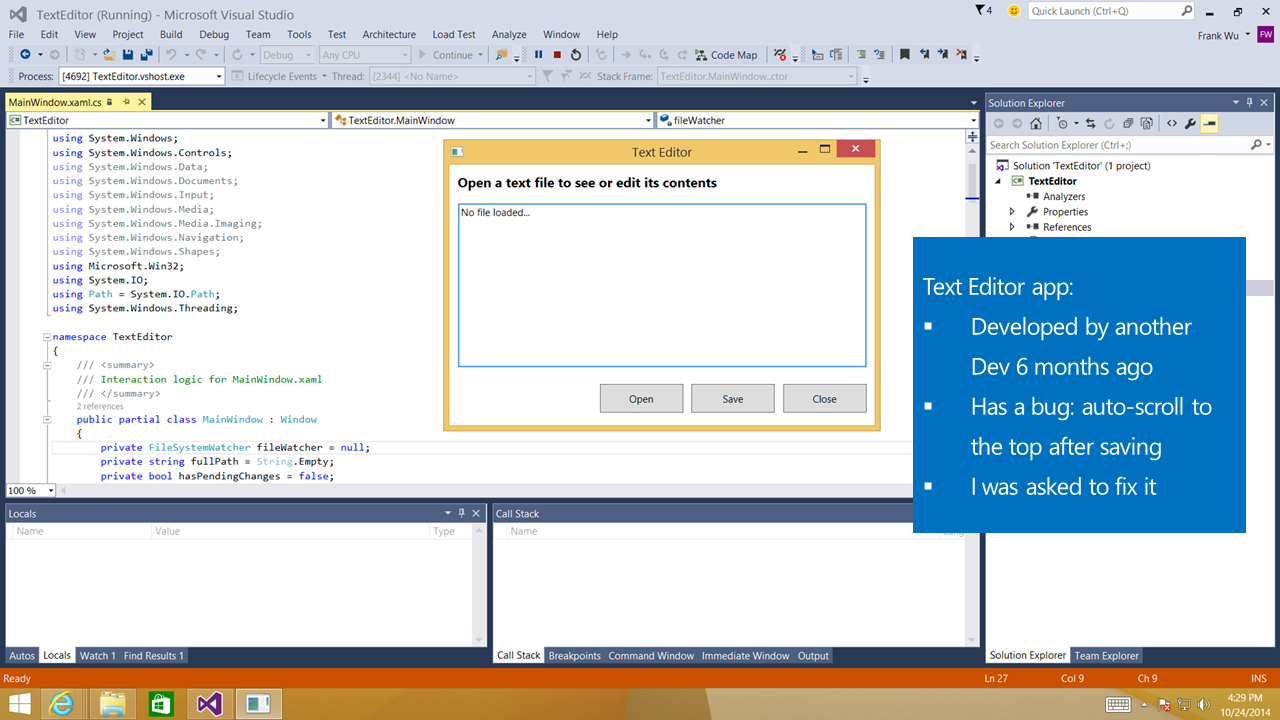

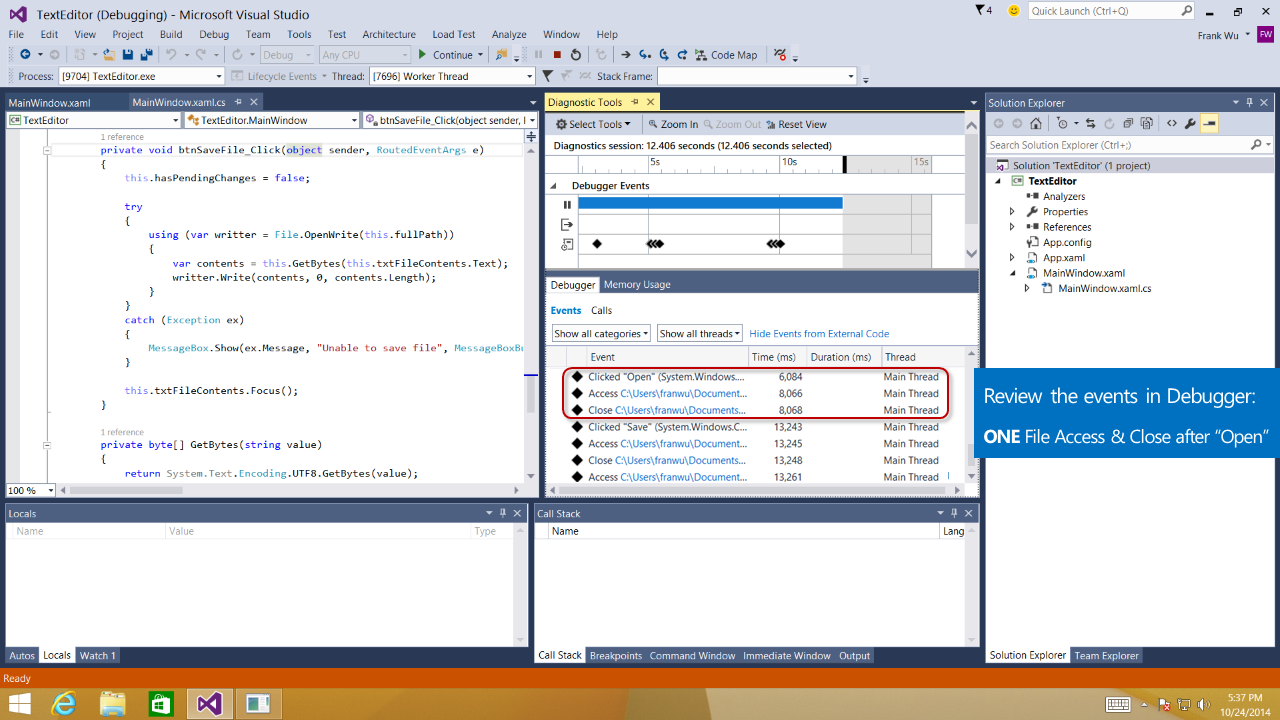

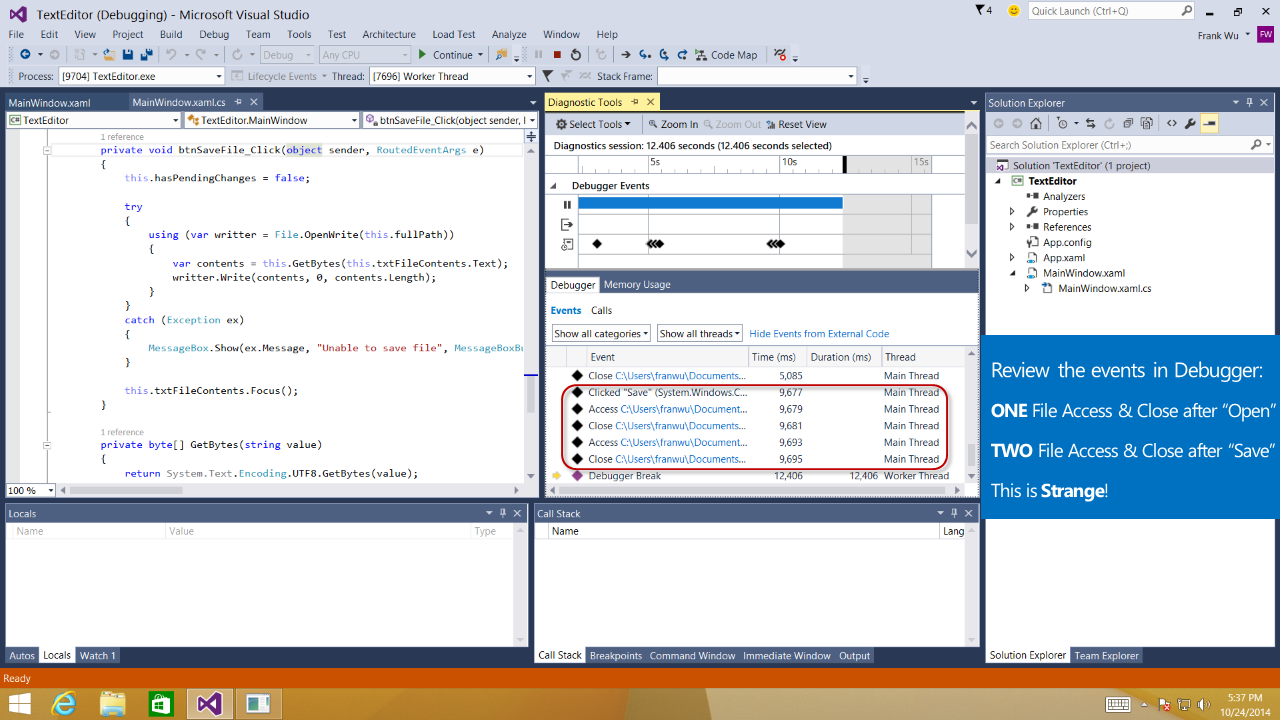

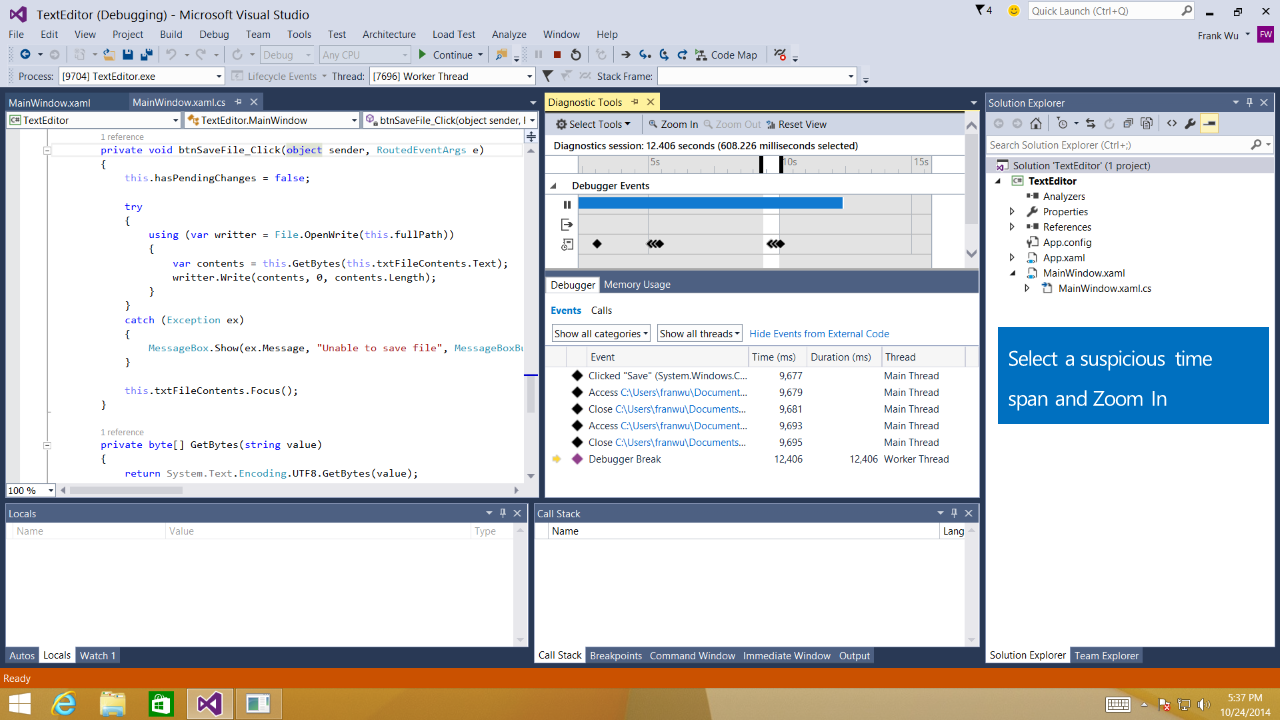

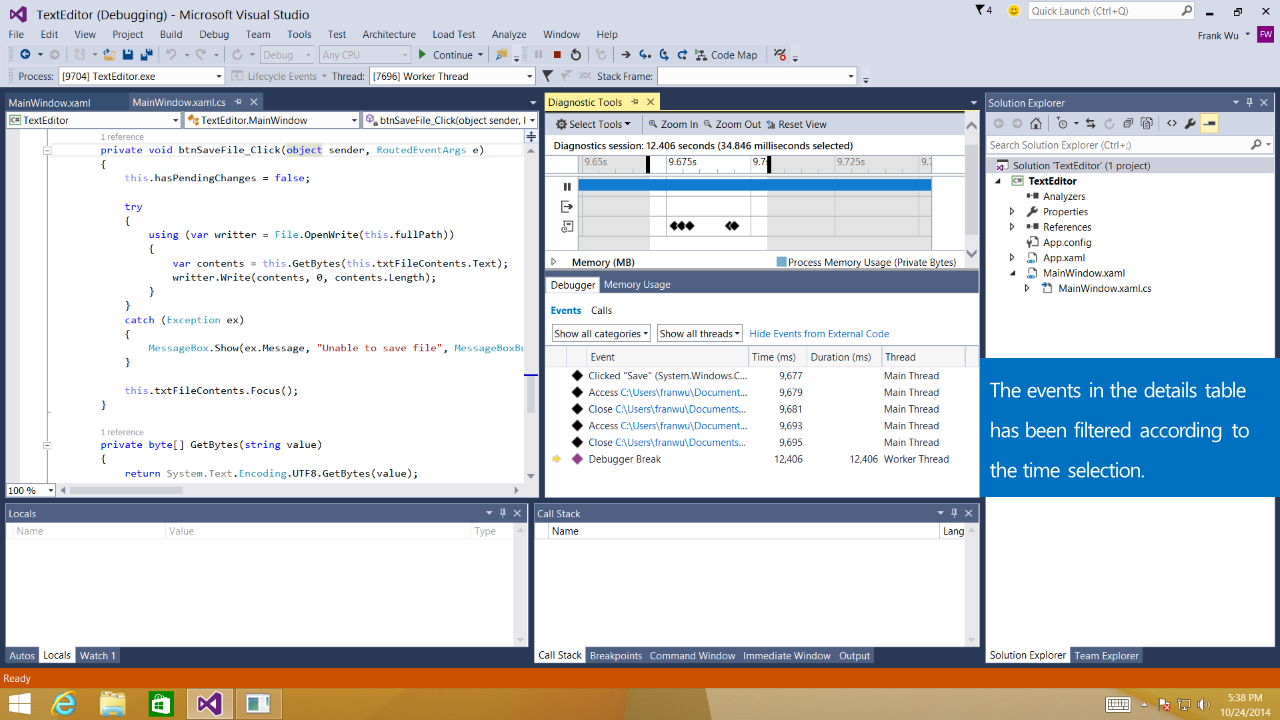

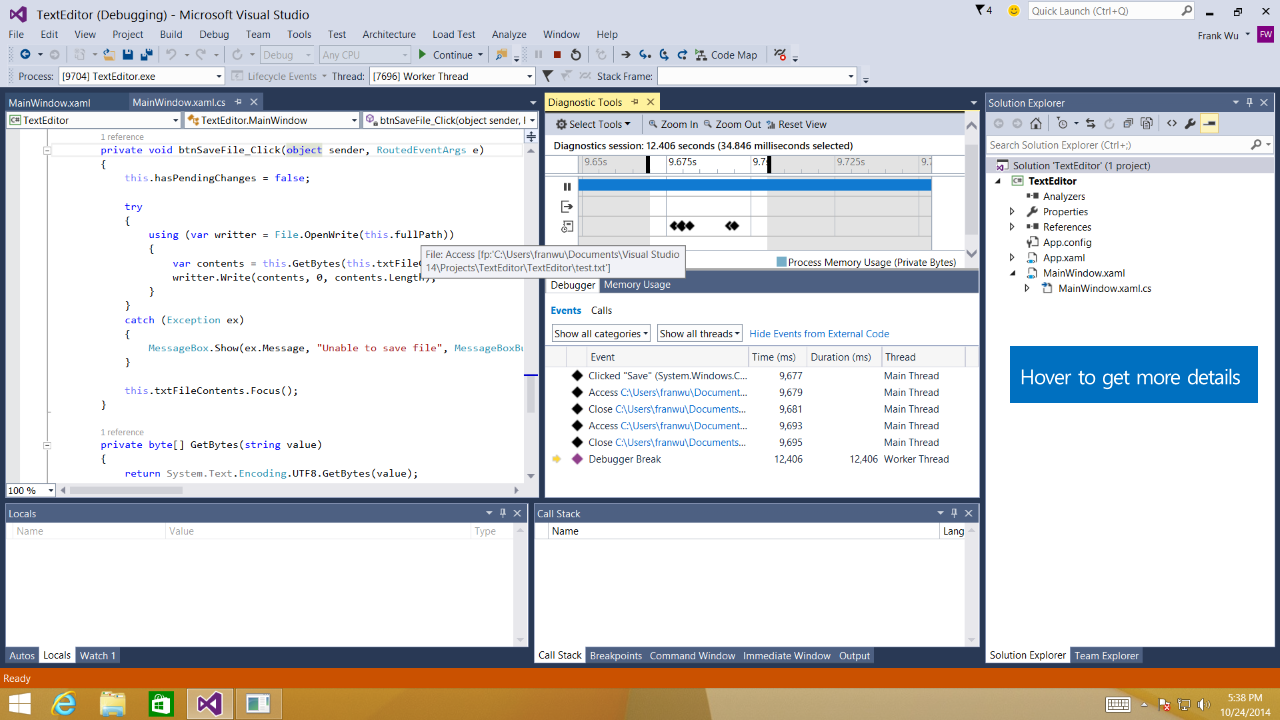

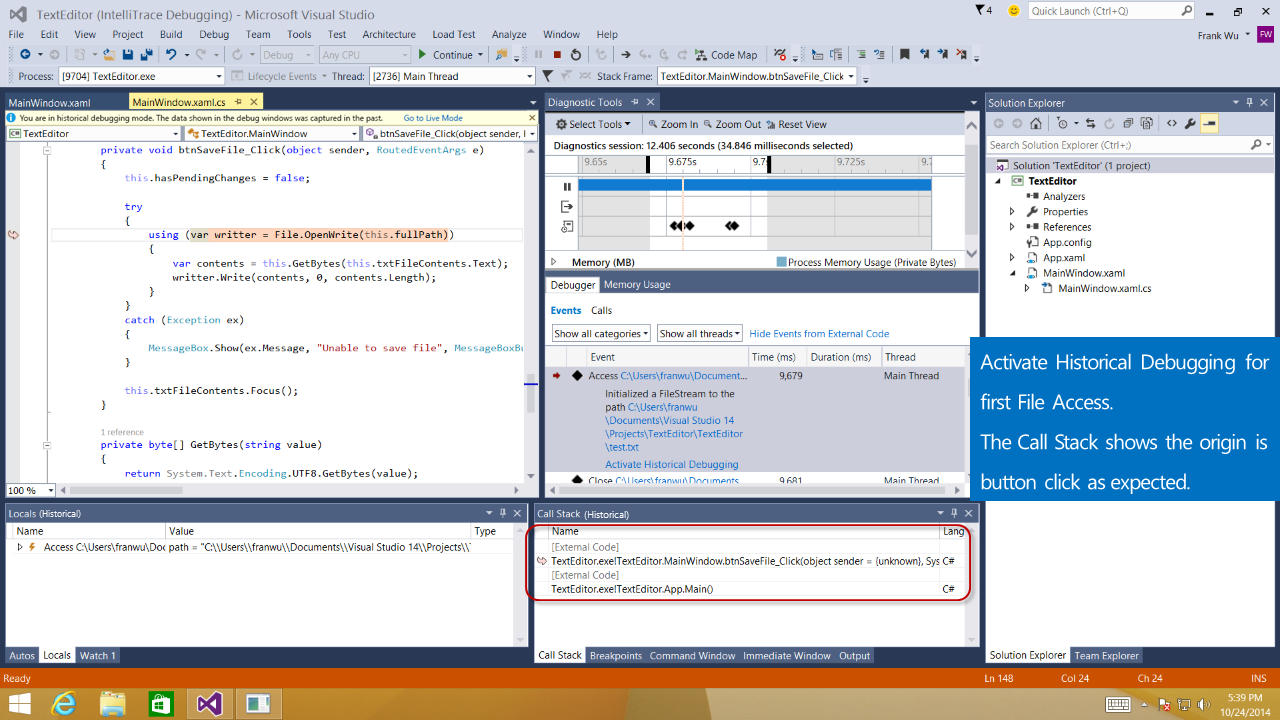

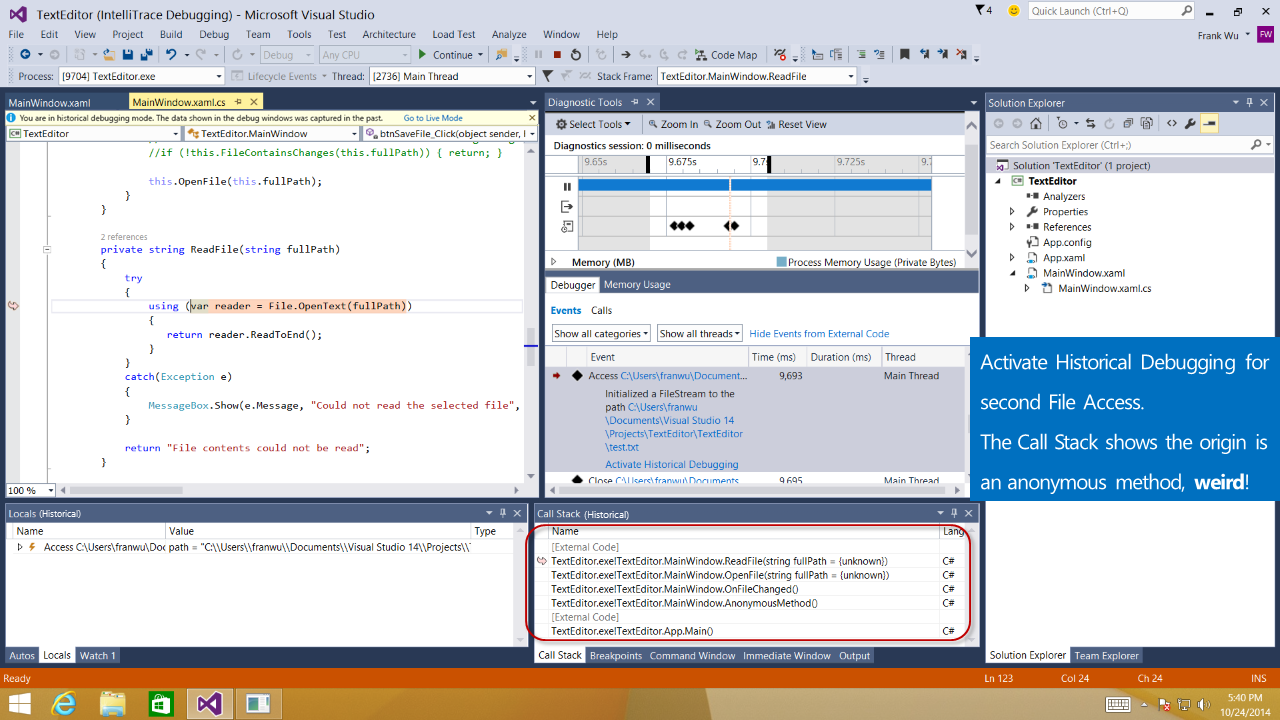

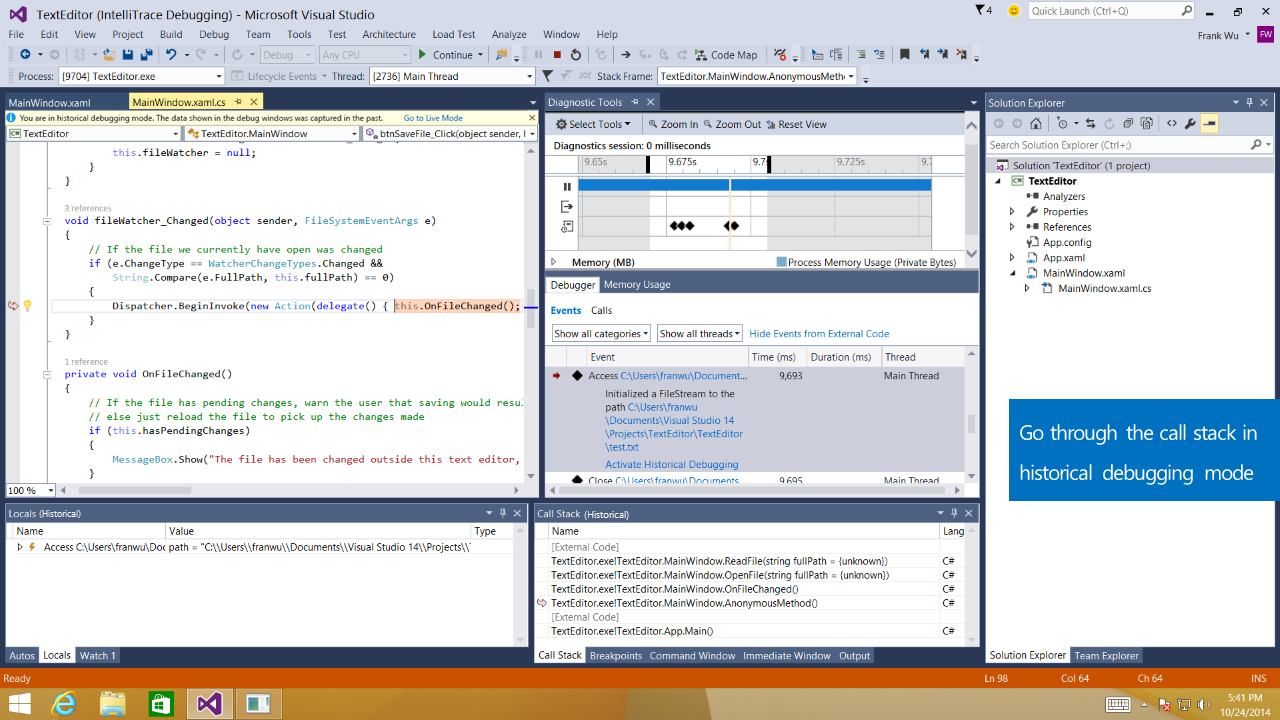

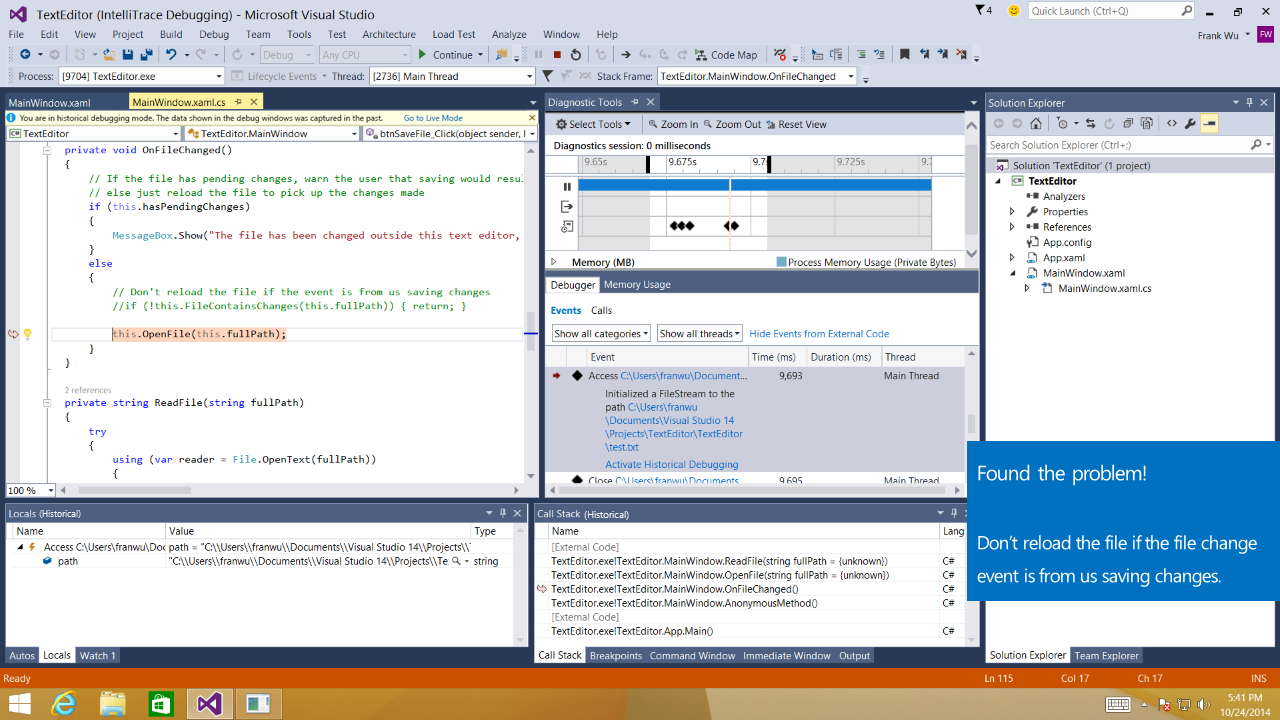

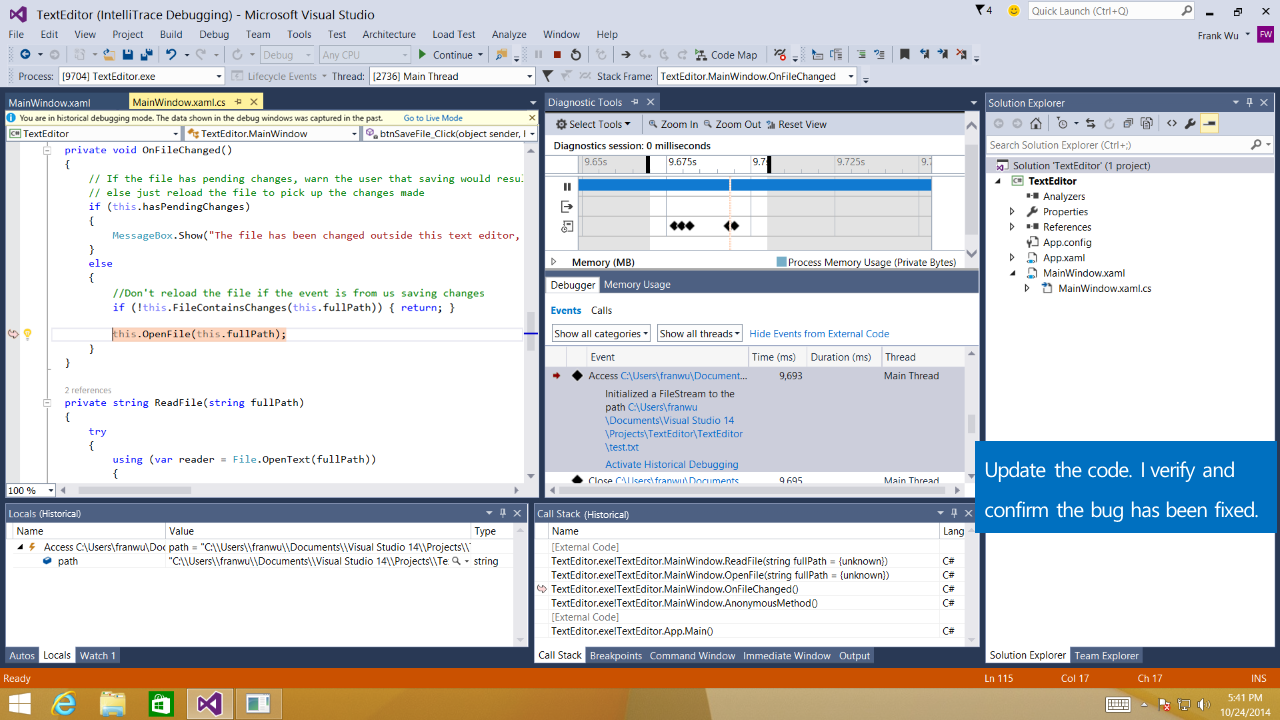

After many design iterations, we found a solution that delighted users and achieved our goals. The story compares the old experience with the new one. In the old experience, the user needed 3 hours to fix a bug; in the new experience, it took him only 15 minutes. The walkthrough is a bit long, but it’s important to show the value of the new experience in the context of our customers’ daily lives.

The result

The new Performance Hub was a great success. It was demoed at multiple conferences as the most important feature of the recent release. Customer feedback was overwhelmingly positive, and the whole team is very proud of what we shipped: we made our customers’ lives easier.